Eurorack modules like the Make Noise Spectraphon and the Rossum Panharmonium offer a powerful set of artistic and computational tools that sit somewhere between synthesis and sampling. But these modules are just the latest chapter in a rich history of spectral music which has its roots in a Cold War-era attempt to regulate the proliferation of nuclear weapons and the unpublished work of a German mathematician, Carl Friedrich Gauss, in 1805.

Drawing on new computer-assisted composition techniques, in the late 20th century, composers such as Claude Vivier, Gérard Grisey, Tristan Murail, James Tenney, and Michaël Lévinas created a style of music that incorporates acoustic elements from the natural world without ever using direct sampling. In so doing they created an entirely new way to conceptualize musical tone, harmony, and spectrum. In the 21st century these techniques have grown into an ecosystem of devices, VSTs, and music programming environments which have influenced popular music as well. Whereas spectralism was once restricted to specialized research institutions—such as Institut de Recherche et Coordination Acoustique/Musique (IRCAM) in Paris, where powerful computers made the computationally intensive tasks tractable—the advent of powerful microcontrollers such as the ARM Cortex series has brought this technique into the home studio.

In this article we’ll trace this history of spectralism from its origins and guess at what might come next.

What are Sound Spectra?

The term spectralism is often used in connection with a group of artists associated with the French acoustic research institute IRCAM in the 1970s. In the broader sense, however, spectralism, as the name suggests, is music that draws inspiration from sound spectra. But what is a sound spectrum?

A sound spectrum is a representation of the different frequencies present in a sound. Imagine striking a piano key; while you hear a single note, what you're actually hearing is a blend of several frequencies, each with varying amplitudes. The dominant frequency (often the loudest frequency, referred to as the fundamental) usually determines the pitch we recognize, while the others, called overtones or harmonics, are higher tones that blend together to add richness and character to that note.

Sine waves are conceptually the basic building blocks of all periodic sound. When isolated, a sine wave sounds kind of digital—pure and colorless. Imagine a flawless, uninterrupted tone without any discernible texture.

Sounds in the real world are rarely so simple, but mathematically we can represent complex sounds as just many simultaneous sine waves. It's this combination of sine waves that our ears interpret as timbre, which can be thought of as the unique sonic fingerprint of a sound. Timbre gives a sound its distinct identity; it's why a guitar sounds different from a flute, even if they play the exact same note.

This model is an oversimplification, because pitch is fundamentally a psychoacoustic phenomenon. The classic example is the so-called “missing fundamental”: listeners hear a pitch at the fundamental frequency even when the signal contains no energy at that frequency, only its overtones. This illustrates that the human brain constructs pitch from sound using its own hidden heuristics.

Moreover, sounds aren't made up of just repeating patterns. They also have a noise component—random, aperiodic sounds that aren't bound by a consistent frequency. And when it comes to musical tones, especially from instruments, they're ever-evolving. These tones aren't static; they ebb and flow, adding nuances, inflections, and expressiveness that make them so captivating. This fluidity and richness are partly why simple electronic tones can sometimes come off as flat or strident when compared to the warm, dynamic sounds of acoustic instruments.

Machine Listening

Unlike light spectroscopy, which was studied by Isaac Newton in the 1670s using glass prisms, there is no physical material that can refract sound into its component parts. Although early forms of audio synthesis date back to the late 19th century, it wasn’t until 1965 that a computational method was perfected to tease apart the frequencies that make up complex sounds.

The solution, in the form of the Cooley–Tukey algorithm, surprisingly wasn’t invented to analyze audible sound spectra at all. In 1963, President Kennedy's Science Advisory Committee needed a way to remotely monitor arrays of seismometers to detect nuclear-weapon tests in the Soviet Union. However, underground weapons tests had to be distinguished from naturally occurring earthquakes. Because explosions release energy in every direction outwards from a single point, whereas most earthquakes are caused by the lateral slippage of tectonic plates, explosions and natural earthquakes each have a unique acoustic signature composed of different waveforms.

Scientists knew that, theoretically, they could distinguish explosions from background seismic activity by analyzing the different proportions of primary and secondary waves (so-called P- and S-waves) in the detected seismic signal. But how could they quantify the component sine waves hidden in the noisy squiggles scrawled on seismograms?

The solution not only worked for nuclear-weapon test monitoring but also solved a huge number of seemingly unrelated problems. The Fast Fourier transform (FFT) is an astonishingly useful class of algorithms that optimizes the computation of discrete Fourier transforms. The math terminology sounds complex, but the basic principle behind the Fourier transform is really simple. To determine if a given sine wave exists in a complex signal, we just need to multiply each point in the complex signal by the corresponding value in the sine wave. Then we compute the area under the resulting curve. If the area is not zero, its size quantifies the relative amplitude of the sine wave in the complex signal. (In practice the test is run against both a sine and a cosine of the same frequency, so that the phase of the partial doesn't matter.)
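The multiply-and-sum idea can be sketched in a few lines of Python. This is only an illustration of the principle, not how a production analyzer is written: the function name `sine_content`, the 8 kHz sample rate, and the two-partial test tone are all assumptions made for the example.

```python
import math

def sine_content(signal, freq, sample_rate):
    """Estimate the amplitude of one frequency in a signal by correlation."""
    n = len(signal)
    # Multiply point by point with a sine and a cosine, then sum
    # (the discrete version of "area under the curve").
    s = sum(x * math.sin(2 * math.pi * freq * i / sample_rate)
            for i, x in enumerate(signal))
    c = sum(x * math.cos(2 * math.pi * freq * i / sample_rate)
            for i, x in enumerate(signal))
    # Scale so that a pure sine of amplitude A returns approximately A.
    return 2 * math.hypot(s, c) / n

SAMPLE_RATE = 8000  # assumed for illustration

# One second of a test tone: 220 Hz at amplitude 1.0 plus 440 Hz at amplitude 0.5.
tone = [math.sin(2 * math.pi * 220 * i / SAMPLE_RATE)
        + 0.5 * math.sin(2 * math.pi * 440 * i / SAMPLE_RATE)
        for i in range(SAMPLE_RATE)]

for f in (220, 330, 440):
    print(f, round(sine_content(tone, f, SAMPLE_RATE), 3))
```

The two frequencies that are actually in the tone come back with their amplitudes, while 330 Hz, which is absent, comes back as essentially zero.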

Although conceptually rather simple, repeating this calculation for every possible partial in a sound demands a nontrivial amount of computation, even for a computer. The answer came in the form of the Fast Fourier transform, which is basically an optimization of the Fourier transform algorithm: it reorganizes the work so that intermediate results are shared rather than recomputed, an approach now common in other domains such as image compression. This allows a computationally complex process to be efficiently repeated many thousands of times.
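To make the optimization concrete, here is a hedged sketch comparing a direct discrete Fourier transform with a textbook recursive radix-2 FFT in the Cooley–Tukey style. It is a teaching version rather than an optimized one; the function names and the eight-sample test signal are assumptions for the example, and the input length must be a power of two.

```python
import cmath

def dft(x):
    """Direct discrete Fourier transform: roughly n * n multiplications."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def fft(x):
    """Recursive radix-2 FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return list(x)
    even = fft(x[0::2])  # transform of the even-indexed samples
    odd = fft(x[1::2])   # transform of the odd-indexed samples
    out = [0j] * n
    for k in range(n // 2):
        # Each half-size result is reused for two output bins.
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out

# Two cycles of a sine wave captured in eight samples.
samples = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0]
slow, fast = dft(samples), fft(samples)

# The two methods agree up to floating-point rounding.
print(max(abs(a - b) for a, b in zip(slow, fast)))
print([round(abs(v), 3) for v in fast])
```

The saving comes from the recursion: each level splits the problem in half and reuses the half-size results, so the cost grows roughly as n log n instead of n squared.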

The applications of FFTs go far beyond nuclear weapons test monitoring. Following the publication of the Cooley–Tukey algorithm, a very similar algorithm was discovered scribbled in the unpublished papers of German mathematician Carl Friedrich Gauss, who, in 1805, considered using a similar technique to calculate the orbits of the asteroids Pallas and Juno from incomplete observations.

FFTs have also formed the basis of virtually all sound spectrum analysis tools since the first commercial FFT system became available in 1967. Initially envisioned as primarily a tool for tasks like industrial diagnostics and electronic warfare, in time the ability to capture a snapshot of a sound and examine its spectra has had a profound effect on artists' understanding of acoustics. Through spectral analysis, timbre becomes harmony and vice versa. Orchestration can be rooted in an analytical study of repeatable phenomena. And the ephemeral can be reconstructed from little more than a recording of waveforms.

When, in 2004, Jason Brown, a professor of mathematics at Dalhousie University, published a paper called "Mathematics, Physics and 'A Hard Day's Night,'" it was spectral analysis through FFT that allowed him to show that producer George Martin likely doubled part of The Beatles' famous opening chord on the piano, solving a mystery that had long puzzled musicians.

Spectral Music: Almost But Not Entirely Unlike Tea

In a footnote to his thesis on hearing timbre-harmony in spectral music, composer Christopher Gainey quotes Douglas Adams's The Hitchhiker's Guide to the Galaxy: the hapless protagonist Arthur Dent has found a machine that provides him with a drink that is invariably “almost, but not entirely, unlike tea.”

So it often is with spectral resynthesis.

“Resynthesis” is the attempt to reconstruct a recorded sound through first analyzing the spectra of the recorded sound and then playing back the component overtones at the correct amplitudes. The result is puzzling. Resynthesized sounds can sound very similar to the original, to the point that resynthesized speech is often comprehensible. But resynthesized sound is very obviously not the original sound. Rather like a compressed image file, resynthesized sounds capture the outline of a sound while leaving out a great deal of the detail present in the original. This happens because of limitations in the number of overtones resynthesized, limitations in the rate of change over time, or through lacking important noise spectra present in the original.
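The effect of limiting the number of overtones can be sketched numerically. The example below is a toy, not a description of any particular resynthesizer: it analyzes a single 64-sample frame with a direct DFT, keeps only the loudest few partials, and rebuilds the frame from them. The test frame, the function names, and the RMS error measure are assumptions chosen for illustration.

```python
import cmath
import math

def analyze(frame):
    """Return (bin, amplitude, phase) for each bin below Nyquist via a direct DFT."""
    n = len(frame)
    partials = []
    for k in range(1, n // 2):
        z = sum(frame[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        partials.append((k, 2 * abs(z) / n, cmath.phase(z)))
    return partials

def resynthesize(partials, n, keep):
    """Additive resynthesis using only the `keep` loudest partials."""
    loudest = sorted(partials, key=lambda p: p[1], reverse=True)[:keep]
    return [sum(a * math.cos(2 * math.pi * k * t / n + ph) for k, a, ph in loudest)
            for t in range(n)]

def rms_error(a, b):
    """Root-mean-square difference between two equal-length frames."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

N = 64
# Hypothetical test frame: a sawtooth-like tone built from 8 harmonics.
original = [sum(math.sin(2 * math.pi * h * t / N) / h for h in range(1, 9))
            for t in range(N)]

for keep in (2, 4, 8):
    copy = resynthesize(analyze(original), N, keep)
    print(keep, round(rms_error(original, copy), 4))
```

With all eight partials the copy matches the original almost exactly; with fewer, the outline survives but the error grows. A static frame like this also sidesteps the harder problems mentioned above, namely change over time and noise.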

Today we more commonly think of resynthesis as involving synthesizers or software that synthesizes the components of the sound spectra directly, but it didn’t start out that way. It started out with acoustic instrumental music.

In the late 1960s and early 1970s, composers began to fuse technology and artistry. As spectral analysis software became increasingly available in research institutions, a new generation of composers began to use this technology to create sounds that drew inspiration from the physics of sound. Early interest was focused around two groups of artists: the l’Itinéraire group in France and a second group of composers in Germany associated with Cologne’s Feedback Studios.

Although Danish composer Per Nørgård's Voyage into the Golden Screen from 1968 is commonly cited as the earliest spectral work, the defining work of spectralism from the 1970s is probably Gérard Grisey’s Les espaces acoustiques series. A student of composers Olivier Messiaen, Karlheinz Stockhausen, Iannis Xenakis and György Ligeti, Gérard Grisey was also keenly interested in acoustics, which he studied with French physicist and acoustician Émile Leipp and later at the Parisian music research institute IRCAM.

Les espaces acoustiques is a series of instrumental works in which groups of instruments mimic sounds by acoustically resynthesizing their spectra. The third movement, Partiels, scored for a chamber orchestra of 18 musicians, is based on the sound spectrum of a trombone playing a low E. The work begins with the trombone itself playing the fundamental. Gradually strings and woodwinds fill in the overtones of the trombone, creating a ghostly simulacrum of a trombone. As higher partials are gradually added, the spectrum reveals currents and eddies that gradually bring the listeners’ attention to hidden harmonies within the spectrum of the trombone.

Largely lacking in melody, Les espaces acoustiques might sound very alien to the unaccustomed listener. Grisey himself disliked the term spectralism and instead preferred the term “liminal” for this approach to composition, emphasizing that the result sits somewhere between various sonic possibilities. It is somehow both harmony and timbre. We hear the chamber orchestra as a representation of the tone of the trombone, yet it’s not a carbon copy. It’s an extension of that tone, which gradually reveals new ways to listen to that timbre and thereby also plays on psychoacoustics.

While Les espaces acoustiques represents an austere and unalloyed approach to spectralism, by the 1980s, spectralism had expanded to encompass a wider range of compositional techniques and influences. A classmate of Gérard Grisey and fellow spectralist Michaël Lévinas, Tristan Murail brought a unique perspective to the movement. In particular, Murail's approach to spectralism was deeply influenced by his foundation in electroacoustic music.

A major landmark in late-20th-century music, Murail’s Gondwana (1980) is a titanic orchestral work which vacillates between chords derived from trombone spectra (again) and inharmonic bell-like spectra, created through frequency modulation (FM) synthesis. FM synthesis is now a mainstay of synthesizer music, where it’s frequently used to create rich and often clangorous tones by using one waveform to rapidly modulate the frequency of another. But in 1980, FM synthesis was still a relatively new technique. John Chowning had discovered it at Stanford in 1967 and published its mathematics in a 1973 paper. In Gondwana, rather than performing FM synthesis in a computer or analog synthesizer, Murail applied the mathematics of FM synthesis as an algorithmic method of generating sound spectra. The tones of these spectra were modulated “on paper” by way of the FM formula published by Chowning, and the spectra were then scored as harmony for the instruments of the orchestra.

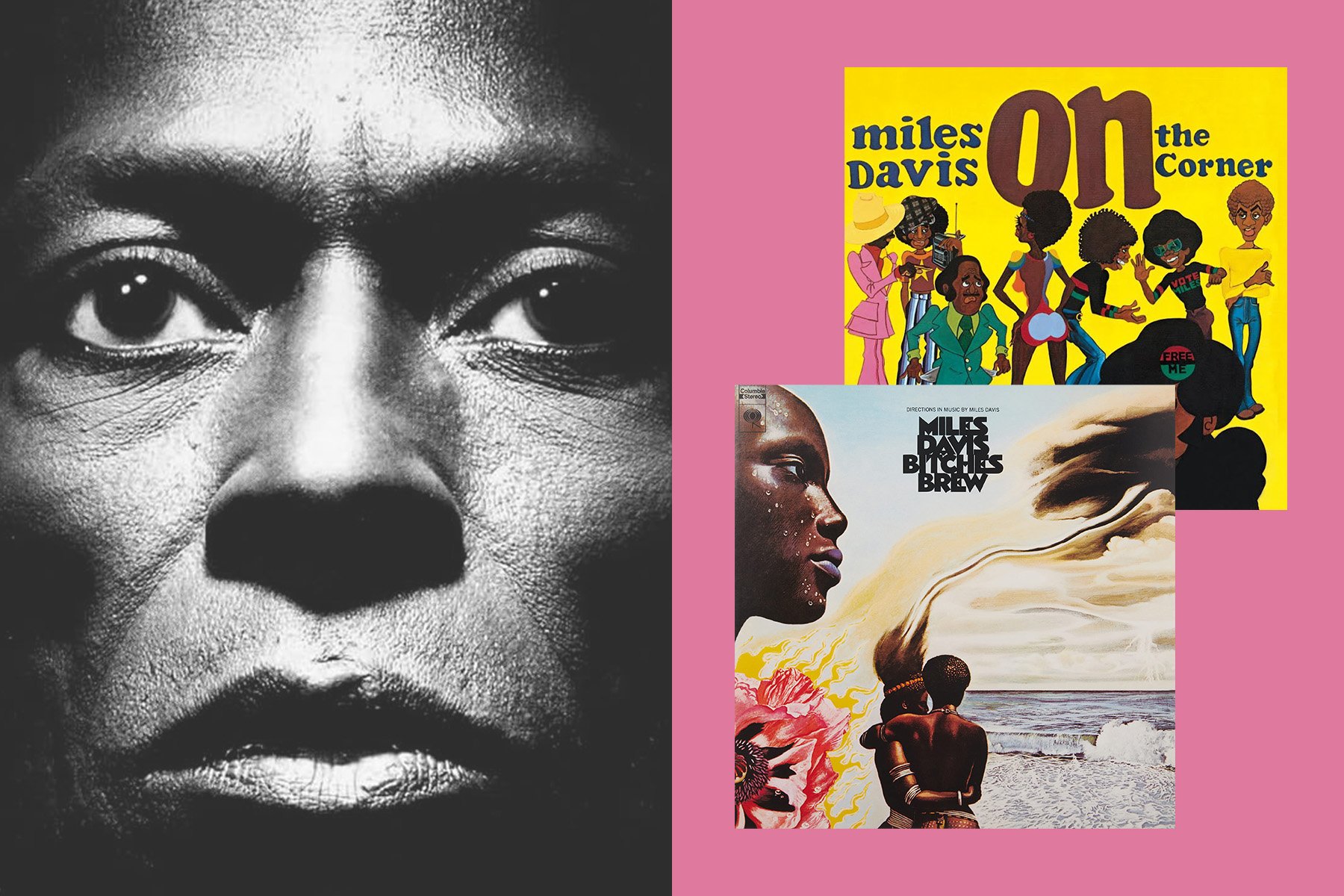
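The “on paper” use of FM described above can be sketched in code. In simple FM, sidebands appear at the carrier frequency plus and minus whole-number multiples of the modulator frequency, and negative results reflect back into the positive range. The sketch below lists those frequencies and rounds each to the nearest quarter tone, as a composer scoring for instruments might. The carrier, modulator, and index values are hypothetical and are not taken from Gondwana; treating the index as the number of sideband pairs is a simplification, and sideband amplitudes (given by Bessel functions) are left out.

```python
import math

def fm_sidebands(carrier, modulator, index):
    """Frequencies carrier ± k * modulator for k = 0..index, reflected to be positive."""
    freqs = {abs(carrier + k * modulator) for k in range(-index, index + 1)}
    return sorted(f for f in freqs if f > 0)

def nearest_quarter_tone(freq):
    """MIDI note number rounded to the nearest quarter tone (a step of 0.5)."""
    midi = 69 + 12 * math.log2(freq / 440.0)
    return round(midi * 2) / 2

# Hypothetical values, chosen only for illustration.
CARRIER, MODULATOR, INDEX = 400.0, 150.0, 4

for f in fm_sidebands(CARRIER, MODULATOR, INDEX):
    print(f, nearest_quarter_tone(f))
```

Each printed pair is a frequency in hertz and an approximate pitch that could be assigned to an instrument, with fractional note numbers standing for quarter-tone inflections.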

North American spectralism, while drawing inspiration from the same core principles of sound analysis and reconstruction as its European counterparts, has carved out a unique niche that is influenced by both technological advancements and the continent's rich tapestry of experimental traditions.

One of the pivotal figures in North American spectralism is James Tenney. His work in the 1960s at Bell Labs, a hub for technological innovation, situated him at the convergence of pioneering technology and musical exploration. It was here that he was introduced to the Shepard-tone phenomenon. This concept, named after cognitive psychologist Roger Shepard, encapsulates an auditory illusion where a series of tones seem to be infinitely ascending or descending in pitch. Tenney's electro-acoustic piece For Ann (rising) is a direct manifestation of this concept, which he later orchestrated in For 12 Strings (rising) (1971).
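For readers who want to see why the illusion loops, here is a small sketch of a generic rising Shepard tone. It is not a model of Tenney's piece. The octave spacing, the 20 Hz base frequency, and the raised-cosine loudness curve are all assumptions chosen to keep the example simple.

```python
import math

def shepard_components(position, octaves=8, base=20.0):
    """Return (frequency, amplitude) pairs for one instant of a rising Shepard tone.

    position in [0, 1] is how far every component has climbed within its octave.
    """
    components = []
    for i in range(octaves):
        height = (i + position) / octaves  # 0..1 across the whole range
        freq = base * 2 ** (i + position)
        # Raised cosine: silent at the bottom and top, loudest in the middle.
        amp = 0.5 - 0.5 * math.cos(2 * math.pi * height)
        components.append((freq, amp))
    return components

start = [(f, a) for f, a in shepard_components(0.0) if a > 1e-12]
end = [(f, a) for f, a in shepard_components(1.0) if a > 1e-12]

# After climbing one full octave, the audible components are the same as at the start.
for (f0, a0), (f1, a1) in zip(start, end):
    print(round(f0, 1), round(a0, 3), "|", round(f1, 1), round(a1, 3))
```

Because components fade in at the bottom as others fade out at the top, the end of one octave's climb is indistinguishable from the beginning, and the rise can continue indefinitely.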

Tenney's exploration went beyond just sound analysis; he was deeply involved in the phenomenology of sound, understanding how listeners perceive and interpret sonic elements. His Postal Pieces, for example (each a small score intended to be printed on a postcard) emphasized gradual unidirectional processes, encouraging listeners to actively "hear-out" partials which often traced a particular harmonic series. The use of indeterminacy, where certain musical elements are left to chance, was another hallmark of his compositions. This was heavily influenced by John Cage, a pioneer of indeterminacy in music. In Tenney's Clang (1972), there's a controlled randomness, where players choose from available pitches, giving the piece a texture that's both structured and fluid.

Another distinguishing feature of North American spectralism is its embrace of electroacoustic music. While European spectralists like Grisey and Murail often utilized orchestras to emulate and reconstruct spectra, American composers showed a greater proclivity towards electronic media, influenced by the rise of electronic music studios and research institutions in the U.S. such as the San Francisco Tape Music Center and the Center for Contemporary Music at Mills College. As early as Symphony (1975), James Tenney was experimenting with combining tape delays with large-scale spectrally influenced instrumental works, a medium he would explore extensively throughout the following decades.

While spectralism is often described as a historical movement, its leading proponents largely resisted this classification, preferring to describe spectralism as a compositional technique or aesthetic. In this sense spectralism continues today, although its influence is more varied and now extends perhaps even further among electroacoustic artists.

Synthesis and Software Tools

The first software tools for spectral music in the 1980s were quite limited. Composers at the Parisian music research institute IRCAM had to share the use of the institute’s single massive computer. Fast Fourier transforms are computationally intensive algorithms—particularly when extended into the time domain—so early spectral compositions tended to use either stock spectral analyses, which could be obtained from the scientific literature, or single snapshots of a sound in time. Tristan Murail, recognizing the need for better tools, pioneered several bespoke software programs tailored for spectral calculations on his personal microcomputer.

A few experiments with spectralism-oriented techniques were incorporated into early commercial synthesizers. The Synclavier was an early digital synthesizer manufactured in Norwich, Vermont by the New England Digital Corporation, developed initially in collaboration with Jon Appleton, a professor of Electronic Music at nearby Dartmouth College. The Synclavier II, released in 1980, incorporated audio analysis and resynthesis features via an early graphical terminal and could resynthesize up to 128 harmonics, which it could also crossfade across up to 300 “timbre frames.”

Although popular with some of the most famous musicians of the 1980s (its fans included Michael Jackson, Paul Simon, Frank Zappa, Laurie Anderson, and Chick Corea), the Synclavier II and associated software packages cost a small fortune and were primarily acquired by universities, record labels, and Hollywood production companies.

Although not a competitor to the multi-algorithmic Synclavier II, 1987 saw the introduction of another experiment with a hardware re-synthesizer in the form of the Technos Acxel, an invention of Québécois company Technos. Decades ahead of its time, the Technos Acxel not only used several clever algorithms to achieve dynamic and sophisticated resynthesis, it also included a tablet-computer-like control interface. By drawing on this “tablet” with the finger, the user could shape the resynthesized sound spectrum by changing spectral amplitudes, represented by a matrix of 2000 LED-enabled capacitive touch plates.

The first publicly available computer-assisted composition software designed specifically for spectral analysis was Esquisse, released in 1990, which was the first of several libraries and programming environments built by IRCAM researchers. It was followed by PatchWork, which was written in Common Lisp on the Macintosh. As the name suggests, PatchWork pioneered the patch-cord-style graphical user interface now familiar from Max/MSP and Pure Data, as it attempted to make computer-assisted composition accessible to composers with little-to-no knowledge of computer programming. Finally, in 1996 IRCAM added Open Music, a superset of PatchWork, which added a more sophisticated visual design and better support for the temporal domain. Like its predecessor, Open Music retains a Lisp-like syntax for music scripting, an odd historical anomaly that keeps alive a legendary programming language which has now all but disappeared from industry.

Alongside tools such as Esquisse, PatchWork, and Open Music, the genesis of Max, and later its extension MSP, marked a watershed moment in electronic and computer music. Miller Puckette, originally at IRCAM and later at the University of California, San Diego, designed Max in the mid-1980s to provide a more intuitive interface for composers and musicians to experiment with sound in real time.

Like other IRCAM software, for many artists the primary advantage of Max was its visual programming paradigm, allowing users to "patch" together different elements without needing to code in a conventional sense. However, unlike Open Music, Max/MSP—alongside its open-source counterpart, Pure Data—captured the interest of an expansive and eclectic community, encompassing both seasoned professionals and passionate hobbyists. One of the standout features of both platforms was the flexibility they offered programmers, allowing them to craft their own modules or "externals." This capability greatly broadened the potential of Max/MSP, allowing customizations and extensions.

Spectralism also became more accessible on personal computers and started to become an effect available in digital audio workstations (DAWs) through stand-alone software and VSTs (audio plugins commonly available either bundled with or aftermarket for a DAW). Tom Erbe (of current Make Noise Echophon/Erbe-Verb/Telharmonic/Morphagene/Spectraphon fame) released the first version of his software suite SoundHack in 1991. With a quirky and colorful user interface, SoundHack was a pioneering audio mangling plugin, probably the first tool to make this esoteric technique accessible on the home computer. Of particular interest, SoundHack included various mutation functions for convolving several audio spectra together, a weird cross modulation effect that apparently grew out of experiments with composer Larry Polansky.

By 2007 the Center for New Music & Audio Technology (CNMAT) at University of California at Berkeley offered an extensive set of free spectral plugins for use in Max/MSP which eventually included an FFT-based spectral analysis object by Tristan Jehan based on Miller Puckette’s fiddle~ pitch-tracking object, developed in the mid-1990s.

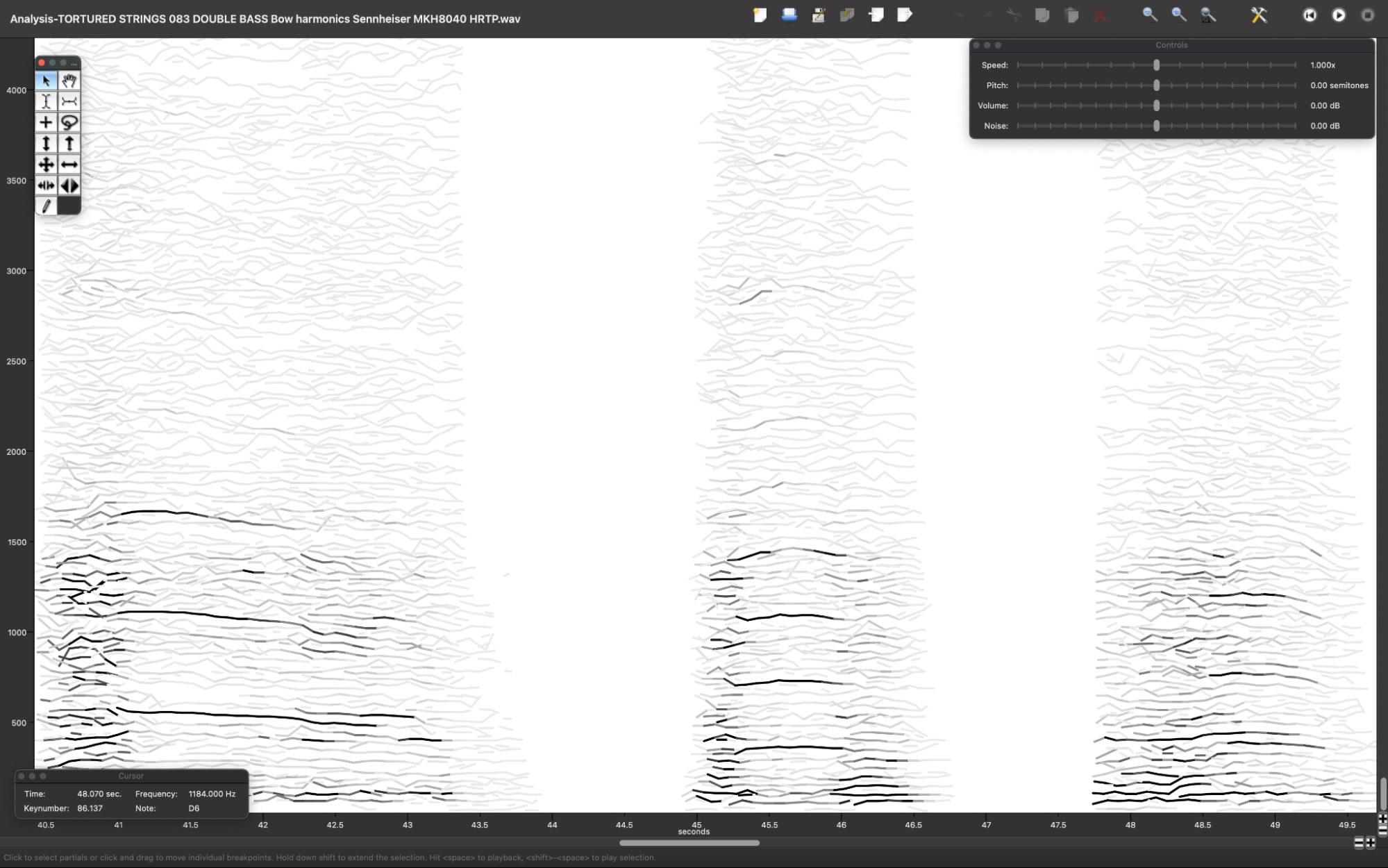

[Above: Double Bass resynthesis in Michael Klingbeil’s SPEAR software.]

At around the same time, in 2006, composer Michael Klingbeil released the first version of SPEAR, an audio analysis and resynthesis software tool which grew out of a doctoral dissertation at Columbia University. SPEAR, which is still maintained and is now available as a 64-bit application for Intel or Apple silicon and macOS 10.10 or higher, has a uniquely approachable interface which allows for direct editing of resynthesized partials and supports several data-export formats.

In recent decades spectralism has also had a much greater impact through VSTs and other audio processing plugins. Of particular note, New Zealand-based composer and programmer Michael Norris’s freeware Soundmagic Spectral plugins have had an unparalleled influence. The package offers twenty-four real-time spectral processing plug-ins. With tantalizing names like “Spectral Emergence” and “Spectral Tracing,” each plugin performs a different computational manipulation of a sound's spectrum via FFT, transforming almost any sound into velvety sonic mist or extraterrestrial vocal shards. Rumored to be in the arsenal of iconic artists such as Brian Eno and Aphex Twin, and lauded in interviews by Travis Stewart aka Machinedrum, Soundmagic Spectral owes its appeal to its distinctive effects and the extensive customizability of its parameters.

Spectral Modular

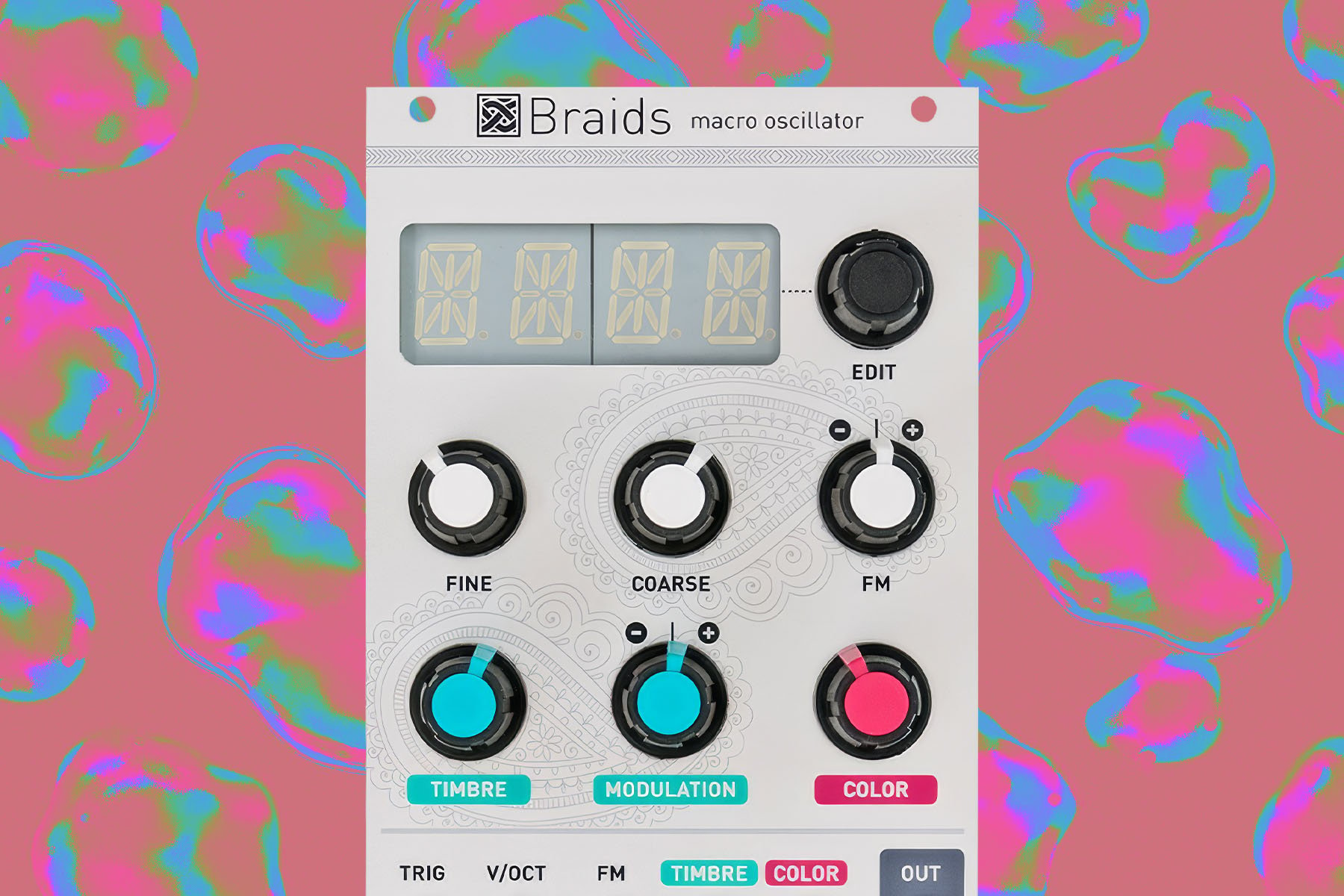

With the recent popularity of modular synthesis, spectral techniques have begun to find their way into modular setups, allowing musicians to manipulate and explore sound spectra in real time. This blend of spectral processing and modular environments has paved the way for the development of innovative hardware units dedicated to spectral transformations.

Modular is in some ways the natural next step for a technique that has found its raison d'être in physics, perception, and computational manipulation of sound. While classic modules like the Buchla Programmable Spectral Processor Model 296 used arrays of bandpass filters to manipulate sound spectra, it is only in the last few years that powerful microcontrollers have made real-time FFT practical in a small format.

A simple application of spectral techniques in modular synthesis is found in pitch-tracking algorithms, which typically convert recorded or live audio into pitch information in the form of volt/octave control voltage. For this reason, in modular, devices with this feature (which is normally implemented by tracking the fundamental through FFT) are sometimes referred to as pitch-to-voltage converters. The most common pitch-to-voltage option in Eurorack is likely the “Pitch and Envelope Tracker” mode (mode B-3) in the Expert Sleepers Disting MK4 and Super Disting EX Plus Alpha. However, pitch tracking for external audio is also available in the Sonicsmith ConVertor E1 Preamp and Audio Controlled Oscillator. And pitch tracking can also be done in a laptop via Max/MSP or another programming environment with control voltage brought into the rack with a DC-coupled interface such as the Expert Sleepers ES-8 or ES-9.
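As a rough sketch of the pitch-to-voltage idea, the code below finds the loudest frequency bin in one frame of audio and converts it to a volt-per-octave value, where each doubling of frequency adds one volt. It does not describe how any of the modules named above work internally. The function names, the frame size, and the choice of 55 Hz as the 0 V reference are assumptions, and real pitch trackers are considerably more careful, since the loudest partial is not always the fundamental.

```python
import cmath
import math

def strongest_frequency(frame, sample_rate):
    """Return the frequency of the loudest DFT bin (a crude stand-in for a pitch tracker)."""
    n = len(frame)
    mags = [abs(sum(frame[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)))
            for k in range(1, n // 2)]
    peak_bin = 1 + mags.index(max(mags))
    return peak_bin * sample_rate / n

def hz_to_volts(freq, zero_volt_hz=55.0):
    """Volt per octave: each doubling of frequency adds 1 V. The 0 V reference is assumed."""
    return math.log2(freq / zero_volt_hz)

# Frame size and sample rate chosen so that bins fall 13.75 Hz apart.
SAMPLE_RATE, N = 3520, 256
frame = [math.sin(2 * math.pi * 220 * t / SAMPLE_RATE) for t in range(N)]

f0 = strongest_frequency(frame, SAMPLE_RATE)
print(f0, hz_to_volts(f0))
```

Here a 220 Hz test tone sits two octaves above the assumed 55 Hz reference, so it maps to 2 V. In a real rack the reference would be set by tuning the receiving oscillator.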

A different approach to spectralism in modular synthesizers has focused on spectral resynthesis. Two modules stand out in this category:

The Rossum Panharmonium Spectral Resynthesizer is a typically maximalist creation of Dave Rossum. A cofounder of E-mu Systems in 1972, along with Scott Wedge, Dave Rossum pioneered a number of instruments that were well ahead of their time, including very early experiments with microprocessors and a unique multidimensional interpolating digital filter, the Morpheus Z-Plane Synthesizer, which came out in 1993 (and was brought back for Eurorack in the form of the Morpheus).

The Panharmonium is built around a spectral analyzer that resynthesizes incoming audio with between 1 and 33 digital variable-waveform oscillators. Driven by an internal or external clock, the module is capable of live spectral resynthesis, and it can also freeze a single “snapshot” of a sound, allowing it to function like an additive synthesis oscillator.

It’s hard to precisely classify the Panharmonium. It can take on the role of either an oscillator or an effect, and more arcane uses (such as percussive effects) are possible as well. Departing from the basics of a re-synthesizer, Rossum has also added a number of features such as “Blur” and “Glide” which allow spectra to hang over into subsequent frames or interpolate between partials. If you squint, the Panharmonium might almost sound like a kind of spectral reverb, capable of instantly transforming even simple sounds into a world of ambient pads and drones. But its many other potential uses are perhaps underexplored and underappreciated.

Another module which recently rekindled interest in spectralism is the Make Noise Soundhack Spectraphon, which puts a distinctive Make Noise twist on re-synthesis. In contrast to many other spectral instruments, the Spectraphon takes unique inspiration both from resynthesis and from classic Buchla instruments, most obviously from complex oscillators, a lineage of modules that trace their ancestry back to the Programmable Complex Wave Generator Model 259. A hallmark of what is now referred to as the "West Coast synthesis" philosophy, complex oscillators are typically two oscillators linked through some kind of modulation, and typically include a built-in timbral stage with wave folding or other effects.

Built in collaboration with Tom Erbe of Soundhack fame, the module offers two primary operational modes: Spectral Amplitude Modulation (SAM) and Spectral Array Oscillation (SAO). In SAM mode, it meticulously analyzes audio inputs, making it possible to overlay an overtone structure onto the oscillators’ output. Conversely, SAO mode lets the user play with saved “Arrays” of sound spectra, allowing for a time-stretched or even reversed playback of external audio.

What truly sets the Spectraphon apart is its inherent adaptability and multifunctionality. While resynthesis remains the focus, Make Noise has discerned that the true essence of a vibrant electronic instrument is often found in modulation. To this end, Spectraphon is equipped with a central modulation bus that is implemented directly within the Spectraphon algorithm. This allows for clean modulation even at extreme settings and makes possible simultaneous bidirectional modulation between the two oscillators without the artifacts that an analog modulation bus would introduce.

Like some other spectral processors, the Spectraphon can divide its output into separate, spectrally interleaved layers—offering distinct outputs for odd and even overtones. Each side of the module features a “Partials” knob to balance between low and high overtones (often referred to as "spectral tilt"), and a "Focus" knob that isolates a specific range of harmonics from the module's memory. Together, these controls provide a sophisticated level of spectral manipulation which sits on top of the resynthesis layer and provides access to spectral manipulations beyond mere analysis.
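The two ideas in this paragraph, odd/even interleaving and spectral tilt, are easy to sketch on a plain list of harmonic amplitudes. The code below is a generic illustration and makes no claim about how the Spectraphon implements its controls: the function names, the linear tilt curve, and the sample amplitudes are all assumptions.

```python
def split_odd_even(amplitudes):
    """amplitudes[0] is harmonic 1 (the fundamental). Returns (odd, even) layers."""
    odd = [a if (i + 1) % 2 == 1 else 0.0 for i, a in enumerate(amplitudes)]
    even = [a if (i + 1) % 2 == 0 else 0.0 for i, a in enumerate(amplitudes)]
    return odd, even

def tilt(amplitudes, amount):
    """amount in [-1, 1]: negative favors low harmonics, positive favors high ones."""
    n = len(amplitudes)
    out = []
    for i, a in enumerate(amplitudes):
        position = i / (n - 1) if n > 1 else 0.0        # 0 = lowest, 1 = highest
        weight = 1.0 + amount * (2.0 * position - 1.0)  # linear ramp around 1.0
        out.append(a * weight)
    return out

# Hypothetical spectrum: four harmonics with falling amplitudes.
spectrum = [1.0, 0.5, 0.25, 0.125]
odd_layer, even_layer = split_odd_even(spectrum)
print(odd_layer, even_layer)
print(tilt(spectrum, 1.0))   # emphasize the upper harmonics
print(tilt(spectrum, -1.0))  # emphasize the lower harmonics
```

Summing the odd and even layers restores the original spectrum, which is why the two outputs can be processed separately and then recombined.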

Since its initial introduction, Make Noise and Tom Erbe have added additional modes of operation to Spectraphon, enabling its use as a network of chaotic oscillators, a complex noise generator, and more. What's particularly notable about Spectraphon is that, instead of using spectralism as a mere novelty, the module assimilates the technique in a way which might be compared to other established synthesis methods like wavetable synthesis. The emphasis is not just on the technique itself, but also on the comprehensive control and modulation of the instrument in its entirety.

Conclusion

Spectralism, although rooted in specific historical moments, transcends its inception. Many of its proponents view it not merely as a passing curiosity, but as an enduring style and aesthetic. This philosophy of drawing inspiration from a scientific understanding of sound itself is not confined to the past but is vividly present and ever-evolving. With the infusion of spectral techniques into modern modular synthesizers, we are reminded that the spectral journey is not just historical. It continues today.

Spectralism, despite its rich theoretical underpinnings and profound impact on contemporary music, is still relatively nascent as a technique. Its evolution from academic computer music environments to more accessible digital audio workstations, and now to modular synthesizers, illustrates the profound potential yet to be unearthed. The integration of spectral techniques into the world of modular synthesis, an arena known for its experimental nature, underscores the vast untapped possibilities and nuances that spectralism holds.

Recent innovations, particularly with the design of microcontrollers in the modular synthesizer domain, are indicative of just the tip of the iceberg. Modules like the Rossum Panharmonium and the Make Noise Spectraphon not only bring spectral techniques into the tactile realm but also suggest that there are layers of complexity and artistry yet to be discovered. Some undoubtedly are waiting on further technical innovations. But the continued interest in this approach to sound manipulation demonstrates that the boundaries of spectralism are far from being fully defined or understood.