Sound, like any complex phenomenon, becomes more richly understood when viewed through a prism of perspectives. Colloquially, we recognize it as an auditory sensation produced by vibrating objects; yet, as many of our readers might readily acknowledge, there is far more to sound than meets the… well, ear. Crucially, whose ear are we talking about—a human’s, a dog’s, a bat’s? Furthermore, is an ear even necessary for experiencing sound? An earthworm lacks ears yet readily responds to the vibrations of falling raindrops or, mimicking the effect, rhythmic rubbing of ridged wood blocks.

So, what is the experience of sound, really, and why is it so foundational to life? What is the nature of the relationship between experiencing a sound and being able to interpret it? What makes it so unique among information media, and how do sound waves encode and carry layers of meaning across space, time, and even cultural or biological barriers?

These questions are at the heart of this article as I explore sound and a wide variety of its expressions as a form of coded communication. Examining sound through this lens inspires us to notice and reflect upon the significance hidden within even the most unassuming chirps. There is also practical value here: understanding the information encoded within sounds deepens our comprehension of the world around us.

To illustrate this, I will draw on a few seemingly unrelated threads: the history of information technology, sound’s essential role within it, and its growing influence on contemporary bioacoustic research. We will begin by reframing sound through the lenses of information and code, trace its role in the history of communication technology, and finally bring it all together by discussing how artificial intelligence is transforming our understanding of animal communication. Let us first address the big question: what is information, and what does sound have to do with it?

Sound As Information

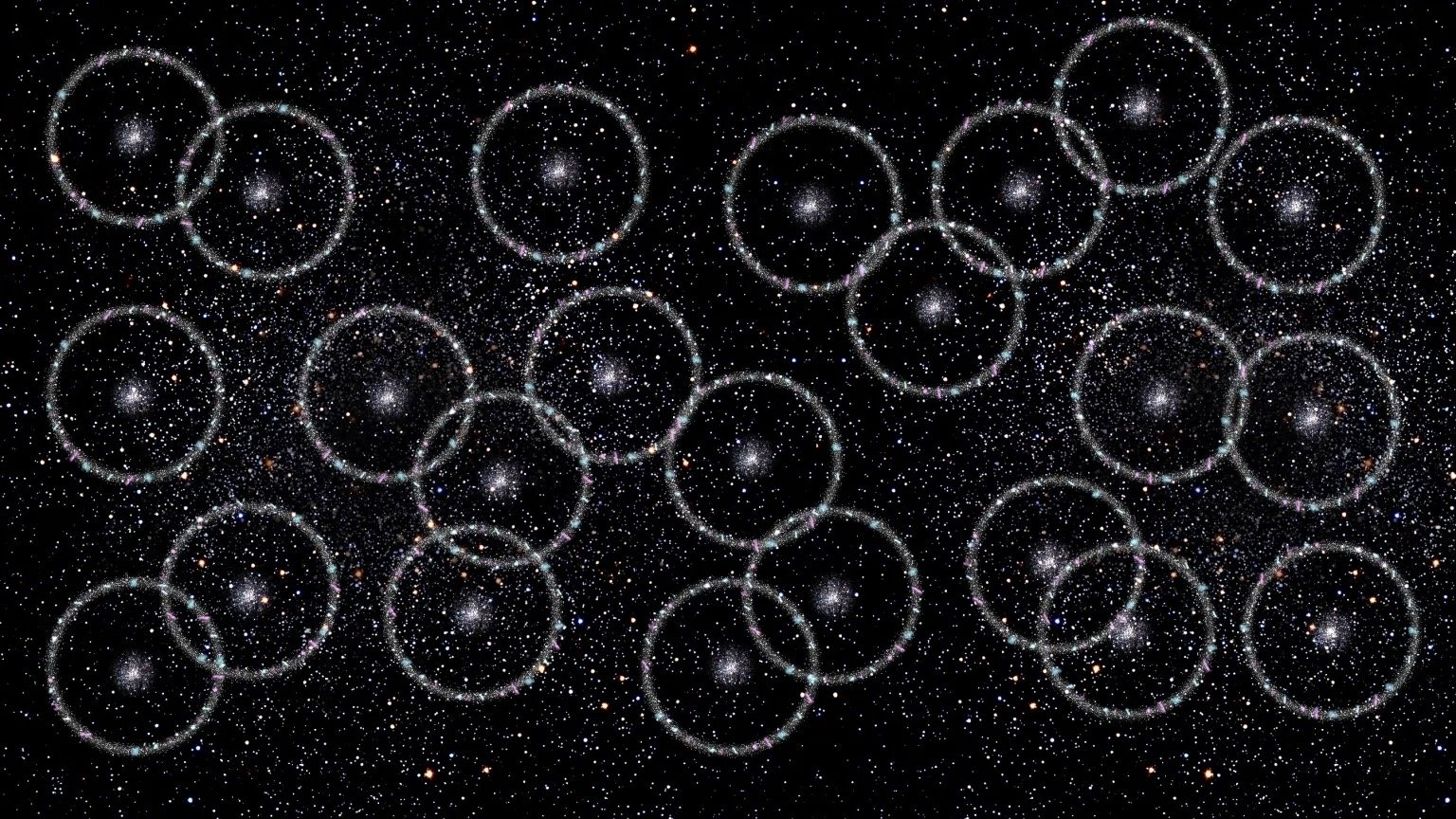

The image above shows galaxies arranged in a spherical pattern reflecting baryon acoustic oscillations, oscillatory features from the early Universe. Previously observed only statistically, they have now been directly identified in a single structure: Hoʻoleilana. (Image Credit: Gabriela Secara, Perimeter Institute)

Have you ever wondered how ancient the phenomenon of sound is? Recorded history began with writing, but sound emerged in the earliest moments of our universe. As far as we know, long before there was any organism with the sensory capacity and conscious experience to perceive sound waves, the universe was already buzzing, booming, droning, and crackling. Let’s bring this into perspective.

Approximately 13.8 billion years ago, right after the Big Bang, our universe was filled with hot plasma. As it began cooling and expanding, protons and neutrons (collectively known as baryons) started to form and immediately began interacting with photons (particles of electromagnetic radiation), creating immense outward pressure. In overdense regions, where gravity pulled matter together, the tug-of-war between this pressure and gravity generated vibrations that scientists call baryon acoustic oscillations (BAO): effectively the first sound waves.

These original waves rippled outward through the cosmos in spherical shells, carrying baryons and photons at immense speeds (about half the speed of light). As the universe continued to expand and cool, photons decoupled from matter, the pressure driving the waves dissipated, and the sound waves “froze” in place. Scientists call the distance these waves had traveled before decoupling, which sets the radius of the faint shells of baryonic matter left behind, the sound horizon.

Over billions of years, the denser regions left by these frozen waves became gravitational focal points, fostering the formation of stars and galaxies. The ripples left by the sound waves influenced the large-scale structure of the universe, evident in galaxies subtly tending to cluster at distances corresponding to the expanded radius of the original ripples. This cosmic pattern, stretched further by the expansion of the universe (which dark energy has accelerated), laid the foundation for the cosmic structures we see today. In essence, these early sound waves encoded the universe’s first “blueprint,” revealing critical information about the primordial plasma’s density, gravitational forces, and the interaction between matter and radiation.

Here lies the crux of our discussion: these early cosmic sound waves illuminate a fundamental aspect of sound itself—its unique ability to carry information. The oscillations of these waves weren’t arbitrary; they encoded specific details about the conditions of the early universe, allowing scientists billions of years later to reconstruct the cosmic past. Importantly, this concept scales all the way down to everyday sound as well.

Now, let’s take a moment to consider an important question: what is information? Given how frequently we use the word in our daily lives, the question may seem deceptively simple at first; after all, you know information when you encounter it. However, as has often been noted, we tend to confuse information with the form it takes. For instance, when we hear words spoken aloud, see text on a page, or interpret the melody of a song, we may mistake the medium (sound waves, ink, or musical notes) for the information itself. Claude Shannon, a renowned mathematician and inventor, proposed that “information” can signify something far more fundamental: a measurable quantity, a “quark of communication.”

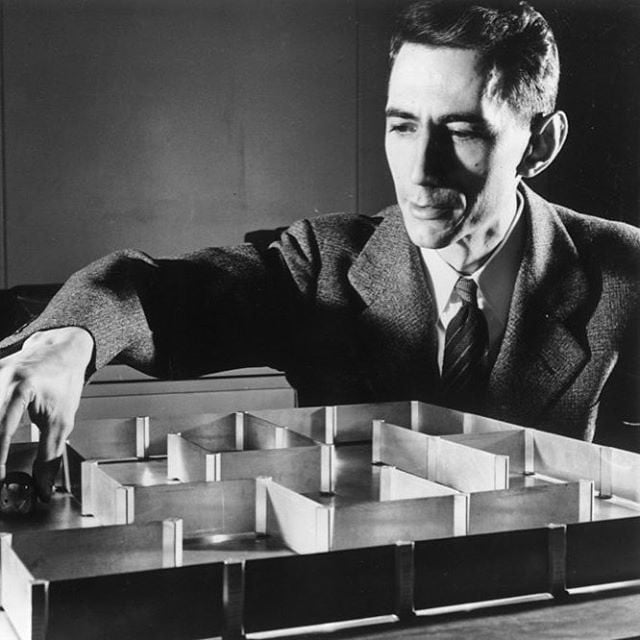

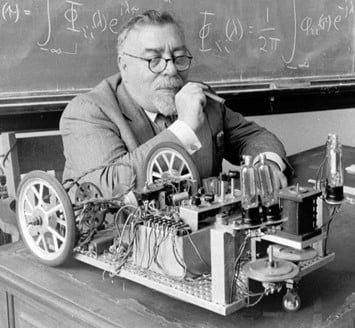

Claude Shannon and the maze he designed for cybernetic rats (Science, Vol. 333, 09/30/11)


From covert cryptographic work at Bell Labs in wartime to the creation of a flame-throwing slide trombone, Claude Shannon’s life abounded in both intellectual rigor and playful invention. However, he is perhaps most widely known as the founding figure of information theory and, by extension, of modern communication technology and our digital world. In the mid-1930s, as a graduate student at the Massachusetts Institute of Technology (MIT), Shannon closely studied relay-based switching circuits and, insightful as he was, saw a parallel between the behavior of these mechanisms and expressions in Boolean algebra, a branch of symbolic logic introduced by mathematician George Boole nearly a century earlier. In Boolean algebra, you solve a problem by representing statements as variables, each either true (1) or false (0), and then combining these variables using logical operations such as AND, OR, and NOT. By following a clear set of algebraic rules, you can reduce or simplify complex expressions until you arrive at a logical outcome: a final value of 1 (true) or 0 (false). This abstraction eliminates the ambiguities of natural language, allowing complex reasoning to be broken into systematic, universally applicable rules. Shannon documented his findings in his 1937 master’s thesis, “A Symbolic Analysis of Relay and Switching Circuits.”
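To make the relay-to-logic parallel concrete, here is a minimal sketch using the now-conventional mapping: contacts wired in series conduct only if all are closed (AND), and contacts wired in parallel conduct if any is closed (OR). This is an illustration, not Shannon’s own notation (his thesis framed circuits in terms of “hindrance,” a dual convention), and the function names are hypothetical. It checks that a two-contact circuit, x AND (x OR y), simplifies to a single contact x, which is the kind of reduction Boolean rules make mechanical.

```python
from itertools import product


def series(*contacts: bool) -> bool:
    """Relay contacts in series conduct only if every contact is closed (AND)."""
    return all(contacts)


def parallel(*contacts: bool) -> bool:
    """Relay contacts in parallel conduct if at least one contact is closed (OR)."""
    return any(contacts)


def original_circuit(x: bool, y: bool) -> bool:
    # Contact x in series with a parallel pair of contacts x and y: x AND (x OR y)
    return series(x, parallel(x, y))


def simplified_circuit(x: bool, y: bool) -> bool:
    # Absorption law: x AND (x OR y) == x, so one contact suffices (y is unused)
    return x


def equivalent(f, g, n_inputs: int) -> bool:
    """Compare two circuits on every combination of open/closed contacts."""
    return all(
        f(*states) == g(*states)
        for states in product([False, True], repeat=n_inputs)
    )


if __name__ == "__main__":
    print(equivalent(original_circuit, simplified_circuit, 2))  # True
```

Exhaustively checking every input combination (a truth table) is only practical for a handful of contacts; the appeal of Shannon’s insight was that algebraic rules let engineers simplify circuits without enumerating cases.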

During World War II, Shannon returned to Bell Labs, where the pressing demands of wartime communication led him to apply his theories to top-secret cryptographic projects. It was during this period that he crossed paths with Alan Turing, another prodigious pioneer of computer science, whose work on machine intelligence resonated profoundly with Shannon’s ideas. While the two found each other’s work fascinating, their philosophical outlooks on the nature of intelligence and computation did not always align. For example, Turing believed intelligence could be formally defined and mechanized using symbolic logic, while Shannon saw intelligence as an emergent, probabilistic process and was skeptical of rigid, rule-based approaches. Turing envisioned machines capable of thinking and reasoning; Shannon was more pragmatic, focused on making machines efficient in transmitting and processing information.

In the introduction to his definitive paper, “A Mathematical Theory of Communication” (1948), Shannon wrote:

“The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point.”

Shannon saw information as a quantifiable, measurable phenomenon. His core insight was that information can be measured by how much it reduces uncertainty, independent of the meaning or purpose of the message itself. He quantified this uncertainty using the familiar yet challenging scientific concept of entropy, a term native to thermodynamics and typically associated with disorder and randomness in a system. In the context of information theory, Shannon concluded, entropy is information. It grows with the number of possible states a system can take, and it is greatest when those states are equally likely. The more uncertainty there is, the higher the entropy, and thus the more information is needed to predict an outcome.
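Shannon’s measure can be written as H = −Σ pᵢ log₂ pᵢ, where pᵢ is the probability of each possible outcome and H is expressed in bits. The short sketch below (the function name is illustrative) computes it for a few simple cases: a fair coin, a heavily biased coin, and a number drawn uniformly from 1 to 16.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy H = -sum(p * log2(p)) in bits; zero-probability outcomes add nothing."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


if __name__ == "__main__":
    print(entropy_bits([0.5, 0.5]))     # fair coin: 1.0 bit
    print(entropy_bits([0.9, 0.1]))     # biased coin: about 0.47 bits
    print(entropy_bits([1 / 16] * 16))  # one of 16 equally likely numbers: 4.0 bits
```

The biased coin carries less information per toss than the fair one because its outcome is easier to predict, which is exactly the link between uncertainty and information described above.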

Shannon proposed that any piece of information can be narrowed down through a sequence of “yes-or-no” (binary) questions. Each question effectively cuts the number of possible outcomes in half, bringing the receiver closer to identifying the exact message. For example, if you imagine trying to guess a number between 1 and 16, you can ask, “Is it greater than 8?” to instantly divide the possibilities into two sets of equal size, making each answer a single bit of information. In this way, Shannon showed that the total information content of a message is fundamentally linked to the minimum number of binary decisions required to specify it completely. This led him to adopt the bit, short for “binary digit” (a word he credited to his colleague John Tukey), as the basic unit of information, forming the bedrock of computer science.
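The guessing game above can be played out in a few lines. This sketch (with a hypothetical `count_questions` helper) always asks “Is it greater than the midpoint?” and counts how many answers are needed; for 16 equally likely numbers, every secret takes exactly four questions, matching log₂ 16 = 4 bits.

```python
import math


def count_questions(secret: int, low: int = 1, high: int = 16) -> int:
    """Find secret in [low, high] with yes/no questions; each answer halves the range."""
    questions = 0
    while low < high:
        mid = (low + high) // 2
        questions += 1
        if secret > mid:  # "Is it greater than mid?" (the first question asks about 8)
            low = mid + 1
        else:
            high = mid
    return questions


if __name__ == "__main__":
    print([count_questions(n) for n in range(1, 17)])  # 4 questions for every number
    print(math.log2(16))                               # 4.0 bits
```

When the number of possibilities is not a power of two, some secrets take one question fewer than others, but none takes more than ⌈log₂ N⌉.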

As Shannon reframed information in this way, it gradually began to appear in everything, interlinking various fields—a symptom that may also be observed with such concepts as energy and intelligence (indeed, intelligence was another word Shannon considered before landing on information). A pertinent 1980s quote from biologist Richard Dawkins, cited in James Gleick’s “The Information: A History, a Theory, a Flood,” states:

“What lies at the heart of every living thing is not a fire, not warm breath, not a ‘spark of life,’ it is information, words, instructions.… If you want to understand life, don’t think about vibrant, throbbing gels and oozes, think about information technology.”

Sound, just as our universe’s ever-unfolding “origin story” suggests, is an extraordinarily rich medium for transmitting information. For one, it is deeply tied to the flow of time, requiring active interpretation of a message as it unfolds. Its physical vibrations create an immediate, visceral experience: low bass frequencies reverberate through your body, while a sudden loud noise can make you jump. Sound waves travel quickly and can cover long distances. Moreover, a single sound can carry multiple streams of information simultaneously: one spoken sentence may convey linguistic meaning alongside emotional tone, cultural context, and acoustic clues about distance and space. Crucially, sound also transcends the purely symbolic, tapping into emotive depths of personal and collective significance. Whether in the powerful unison of chanting or the melody of music, audio stimuli can produce intense, sometimes universal reactions that purely visual media may struggle to evoke. Because sound can travel around obstacles and fill a space, it fosters a sense of shared presence; people can gather around a single source, bonding through a collective auditory moment. Together, these qualities make sound a potent force in shaping both the factual and emotional dimensions of communication. It is no wonder, then, that sound has been central to communication across time and cultures.

Sound As Code

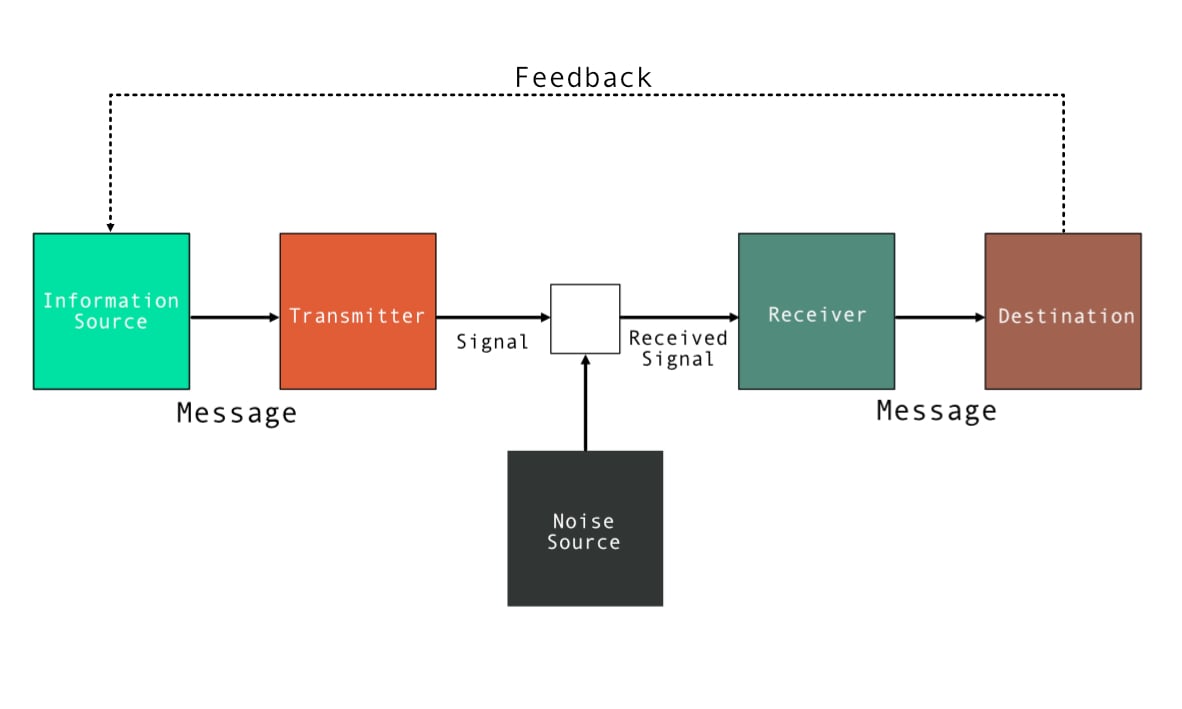

The spectrogram above shows, in sequence: (1) a sonification of the genetic sequence of the SARS-CoV-2 membrane glycoprotein; (2) the message "Hello World" in Morse code; (3) a humpback whale vocalization.

What comes to mind when you hear the word “code”? If your first association is something hidden, you’re likely thinking of a “secret code,” which isn’t surprising given how the term gained prominence alongside the massive global conflicts of the past century. In a broader sense, a code is simply a system of rules or symbols used to represent information—linguistically, numerically, or even behaviorally—transforming data (words, ideas, sound, images) for clarity, efficiency, or compatibility. Some codes are indeed secret (covert communication, cryptography, steganography), while others strive for transparency but still need an informed interpreter. We see this in linguistic codes (slang, symbolic writing), artistic codes (musical notation, aesthetic styles), technological codes (programming languages, binary), biological codes (DNA), and even cultural codes, which manifest as unspoken rules that guide behavior across societies.

The process of converting something into code is called encoding; the process of converting encoded data back into a humanly understandable form is decoding. Take, for example, the language of symbolic logic that inspired Shannon and generations of other cryptographers: it provides a structured framework to represent and manipulate logical statements with mathematical precision. Symbolic logic illustrates how encoding ideas into a universal framework can make them interpretable across different contexts and systems. Similarly, sound can also act as a form of code, translating physical vibrations into carriers of meaning. Intentional sound-based systems like Morse code offer clear examples of encoding, but natural auditory systems, such as tonal shifts in language, hint at the organic evolution of sound as a robust medium for communication. What makes sound particularly compelling is its flexibility: it can be adapted to encode specific meanings while also carrying emotional, cultural, and environmental information.

Now, let's think about something even more familiar to us all: spoken language. Sounds are organized into defined structures that can be rearranged to communicate ideas. A written language encodes a specific set of sounds into a system of symbols. A note from centuries ago transports meaning across the bounds of time and space, as someone reading it recreates or imagines those sounds once encoded. But let’s not just think of human communication—what about sounds produced by other organisms? Are animals, and perhaps even plants, also “talking” to each other?

Acoustic communication is one of the most common and evolutionarily significant modes of interaction in the animal kingdom. An important feature of sound is its inherent intersubjectivity: the same sound wave might hold meaning for one person or organism while being completely abstract, or even undetectable, to another. While I will focus on non-human sound communication in a later section, I would like to take a closer look at some fascinating examples of sound-based communication in humans. For this, I draw upon the interwoven histories of communication technologies and indigenous long-distance communication inventions, such as melodic whistled languages and rhythmic drum languages.

Sound As Language: Drums and Whistles

The image above shows a talking drum alongside an "omele" from the western part of Nigeria, West Africa (Image Credit: Bamidele S. Ajayi, Wikimedia Commons)

The use of abstract sound to synchronize everyone within earshot around a single concrete message or event appears, in one form or another, in most cultures. Church bells, temple gongs, and amplified calls to prayer from a mosque are symbolic sound languages of religions; horn and shell calls, or the terrifying roar of ancient Aztec death whistles, are often associated with military action and inspire armies; the digital bleeps of modern electronic devices communicate a device’s status to the user. However, these expressions of sound only constitute signaling: they are typically one-directional, from a single source to many listeners, and each carries a single prearranged meaning. Sending a simple message through sound is highly effective in certain situations, but it is limited, so it is not really a code. There are, however, numerous examples of sound being used as the core of a coded system. One of the best and oldest is the African talking drum.

While Europeans only “discovered” them in the 18th century, talking drums have been used by various cultures throughout the African continent for centuries, if not millennia. Many are hourglass-shaped percussion instruments whose membranes are connected by cords that can be squeezed to change the pitch, and they blur the lines between music and communication. A common anecdote, retold by many missionaries, traders, and explorers traveling to the inner regions of West and Central Africa and thoroughly documented by John F. Carrington in “Talking Drums of Africa” (1949), mentions Europeans arriving in villages unannounced, only to find themselves already expected and greeted by the locals. When asked how the villagers knew of their arrival, the usual answer was something along the lines of “Drums told us.” The sounds of the drums traveled distances of up to 20 miles, reaching destinations faster than any messenger could.

Dismissing African culture as primitive, Europeans at first presumed that the drums merely signaled simple messages, much like a war trumpet would. The reality couldn’t have been more different. By skillfully manipulating pitch, tone, and rhythm, drummers could mimic the subtlest intonations and melodies of spoken language, effectively sending detailed (and naturally poetic) messages across vast distances. The poetics of the drums had practical significance: drummers wanted to ensure the message was delivered precisely, so extended phrases provided context and reduced ambiguity, making the message clearer and more meaningful. A typical example: the simple message “Come back home” would be rendered by the drummer as “Make your feet come back the way they went, make your legs come back the way they went, plant your feet and your legs below, in the village which belongs to us.”
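Why would longer phrases make a drummed message clearer? A toy model can illustrate the idea under one simplifying assumption: the drum carries only each word’s tone pattern, not its vowels and consonants. Everything in this sketch is hypothetical: the “words,” their high/low tone patterns, and the stock phrases are invented for illustration and are not real Kele or any other language. Three different words collapse to the same drummed pattern, but padding each with its own fixed context makes every phrase distinguishable.

```python
# Hypothetical toy vocabulary: invented words (NOT a real language),
# each mapped to its tone pattern (H = high, L = low).
TONES = {"sela": "HL", "dumo": "HL", "kani": "HL", "tobe": "LH", "ru": "L", "pa": "H"}


def drum(words):
    """A drum keeps pitch but loses vowels and consonants: each word becomes its tones."""
    return "-".join(TONES[w] for w in words)


def candidates(drummed, vocabulary):
    """Return every message in the vocabulary that a listener could not rule out."""
    return [msg for msg in vocabulary if drum(msg) == drummed]


# Bare words: "sela", "dumo", and "kani" all sound like "HL" on the drum.
BARE = [[w] for w in TONES]

# Hypothetical stock phrases that pad each key word with fixed context.
PHRASES = {
    "sela": ["sela", "pa", "tobe"],
    "dumo": ["dumo", "ru", "ru", "pa"],
    "kani": ["kani", "tobe", "tobe"],
}

if __name__ == "__main__":
    print(candidates(drum(["sela"]), BARE))                            # 3 candidates
    print(candidates(drum(PHRASES["sela"]), list(PHRASES.values())))  # 1 candidate
```

In Shannon’s terms, the extra words add redundancy: they lengthen the message but shrink the set of interpretations a listener must choose between.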

Learning to “talk” with drums was a challenging task. These extended messages were not random; specific patterns had to be studied and memorized before a drummer could improvise more complex messages by recombining a variety of rhythmic and tonal changes. Once mastered, however, the drums could be used freely to express anything from prayers to jokes. What seemed mere rhythm to unaware Europeans carried meaningful information to locals who understood the drum language. Because the drums mimicked the subtle pitch variations crucial to many African languages, most of which are predominantly tonal, the talking drum was nearly impenetrable to Western ears not trained to detect such changes. John F. Carrington was reportedly the only European who fully understood, and even became fluent in, the talking drum language (specifically its Kele variant).

Although perhaps not immediately obvious, Carrington’s anthropological insights into talking drums mirror Shannon’s mathematical inquiries into information. While Shannon quantified how information is fundamentally about reducing uncertainty, Carrington documented an ancient, culturally embedded method of encoding linguistic tones and rhythms into drum patterns to convey intricate messages over vast distances. Here, it is not a sequence of binary data that ensures the clarity of the message, but the poetry of the phrasing and the physicality of the sound waves.

While unique, talking drums are not the only example of language encoded into music-like structures. Whistled languages, an equally fascinating and endangered phenomenon, have been found across the world, having evolved independently in various mountainous and forested cultures. Like talking drums, whistled languages mimic spoken ones; however, they exist alongside both tonal and non-tonal languages. Non-tonal languages are whistled by imitating their formant patterns, while tonal languages are whistled by replicating their melodies; both approaches preserve the structure of the original spoken language.

For example, Silbo Gomero, a whistled language native to the island of La Gomera in the Canary Islands, imitates the phonetic sounds of Spanish. Highly effective across the island’s rugged topography, it allows users to transmit messages up to seven miles. Kuş dili, or “bird language,” used in Kuşköy and other mountainous villages of northern Türkiye, has served for long-distance conversations for over 300 years, transforming the entire Turkish vocabulary into distinct pitches and melodic lines. There are even more subtle examples, such as chángxiào, or transcendental whistling, in ancient China, which carried spiritual connotations tied to Daoist philosophy and was seen as an exercise to connect with nature, communicate with animals, and engage with the ineffable realm of spirits.

The decline of naturally encoded sound languages of drums and whistles—which survived for hundreds or even thousands of years—was directly provoked by the rapid rise of electronic communication technologies. Carrington, with a sense of deep regret, observed firsthand how rhythmic communication systems were vanishing from the vocabulary of younger African generations. As outlined by Gleick in “The Information: A History, a Theory, a Flood,”

“Before long, there were people for whom the path of communications technology had leapt directly from the talking drum to the mobile phone, skipping over the intermediate stages.”

Telegraph and the Rhythm of Morse Code

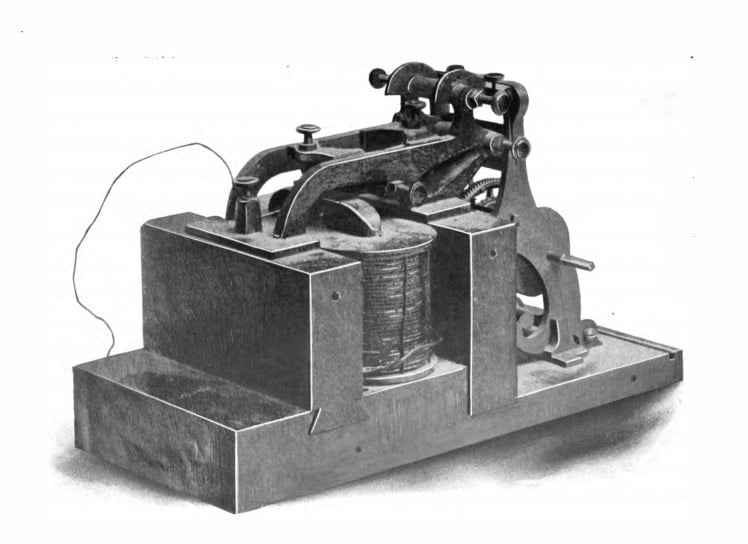

The image above shows the telegraph machine that received the first telegraph message in the United States. (Image credit: "Early History of the Electro-Magnetic Telegraph" by Alfred Vail via Princeton University)

By the early decades of the 20th century, the British Empire had undertaken an ambitious endeavor to weave a vast network of wireless telegraph stations across its colonies, cementing its grip on global communication. As technology advanced, long-distance communication evolved at an accelerating pace: first from the rhythmic pulses of telegraphy to the immediacy of telephony, and soon after to the boundless reach of radio. What began as scattered lines of connection soon expanded into a fully interwoven system linking nations and, eventually, the entire world. News, music, entertainment, and even mundane conversations started traveling faster and farther than ever before.

Morse code, envisioned in the 1830s by American painter-turned-inventor Samuel F. B. Morse (and refined with crucial input from mechanical engineer Alfred Vail and physicist Joseph Henry), was a direct expression of these aspirations. At its core, the code was a standardized system translating letters, numerals, and punctuation into short and long signals, commonly referred to as “dots” and “dashes,” or “dits” and “dahs.” The first official message sent over Morse’s telegraph line was “What hath God wrought?”, shown below in modern International Morse code (the 1844 transmission used the original American Morse code, which differs in several letters):

.-- .... .- - | .... .- - .... | --. --- -.. | .-- .-. --- ..- --. .... - ..--..
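Encoding and decoding Morse code is a simple table lookup. The sketch below covers International Morse code for letters, digits, and the question mark only; separating letters with a space and words with “ | ” is a display convention chosen for this example, not part of the standard, which uses timed silences instead.

```python
# International Morse code for letters, digits, and "?" (other punctuation omitted).
MORSE = {
    "A": ".-", "B": "-...", "C": "-.-.", "D": "-..", "E": ".", "F": "..-.",
    "G": "--.", "H": "....", "I": "..", "J": ".---", "K": "-.-", "L": ".-..",
    "M": "--", "N": "-.", "O": "---", "P": ".--.", "Q": "--.-", "R": ".-.",
    "S": "...", "T": "-", "U": "..-", "V": "...-", "W": ".--", "X": "-..-",
    "Y": "-.--", "Z": "--..",
    "0": "-----", "1": ".----", "2": "..---", "3": "...--", "4": "....-",
    "5": ".....", "6": "-....", "7": "--...", "8": "---..", "9": "----.",
    "?": "..--..",
}
# Reverse table for decoding.
REVERSE = {code: char for char, code in MORSE.items()}

LETTER_SEP = " "  # written stand-in for the short silence between letters
WORD_SEP = " | "  # written stand-in for the longer silence between words


def encode(text: str) -> str:
    """Encode text as dots and dashes; unsupported characters raise KeyError."""
    return WORD_SEP.join(
        LETTER_SEP.join(MORSE[ch] for ch in word) for word in text.upper().split()
    )


def decode(signal: str) -> str:
    """Invert encode(): split into words, then letters, and look each code up."""
    return " ".join(
        "".join(REVERSE[code] for code in word.split(LETTER_SEP))
        for word in signal.split(WORD_SEP)
    )


if __name__ == "__main__":
    message = encode("What hath God wrought?")
    print(message)
    print(decode(message))  # WHAT HATH GOD WROUGHT?
```

Because Morse assigns shorter codes to more frequent letters (E is a single dot), it anticipates, informally, Shannon’s later idea that efficient codes match symbol length to probability.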

Suggested by Annie Ellsworth, the daughter of one of Morse’s friends, this line from the Bible was sent on May 24, 1844, from the Capitol Building in Washington to the railroad station in Baltimore via newly installed telegraph lines. In Morse’s first demonstrations, the mechanism comprised an electromagnet pulling a stylus against a strip of moving paper, marking out the code. This paper tape method proved cumbersome, as it demanded acute attention from the operator. Eventually, a shift to a more direct auditory approach was made: a telegraph “sounder,” whose armature struck against two distinct stops—often fashioned from different materials to produce clearer, contrasting clicks—thus sonified the code, letting operators learn it by ear.

The wired telegraph system of the 19th century was revolutionary for long-distance communication, though it did not allow for voice-based conversations, meaning only short messages could be effectively sent. Morse code was a convenient solution. The operator tapped out a rhythm on a telegraph key, turning the circuit on or off, while the receiver on the other end of the line listened to the pattern and wrote it out for decoding. Then, in the late 1800s, multiple inventors—Antonio Meucci, Johann Philipp Reis, Charles Bourseul, Elisha Gray, and most famously (in the U.S.) Alexander Graham Bell—independently, in one form or another, invented the telephone, which made it possible to transmit voice and other sounds over miles of wire.

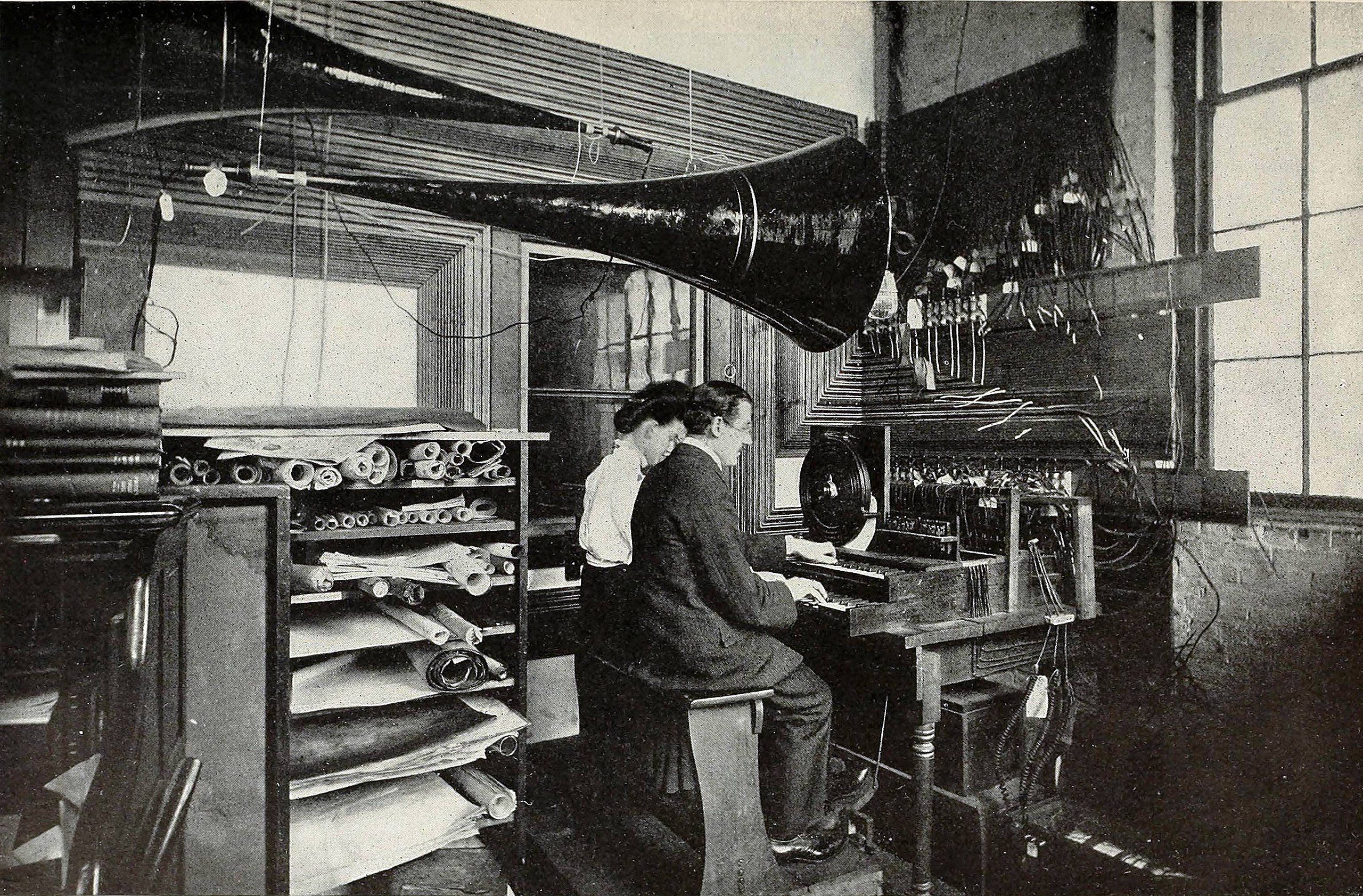

Above: Workshop console of the Telharmonium during its development.

Among the most ambitious projects to utilize this technology was the pioneering additive synthesizer Telharmonium, created by Thaddeus Cahill in 1897. Telharmonium was specifically designed to generate and transmit music electrically over telephone lines. It produced musical tones via large rotating dynamos—each crafted to produce a specific fundamental frequency. By spinning at a precise rate in the presence of electromagnets, these dynamos generated alternating currents matching musical pitches. Moreover, the additive system combined multiple harmonics in varying proportions to create a rich, organ-like sound. On the receiving end, people in hotels, restaurants, or even homes could “tune in” to the music via telephone receivers or specially designed speakers.
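The additive principle behind the Telharmonium, building a rich tone by summing harmonics in chosen proportions, can be sketched digitally. This is an analogy rather than a model of Cahill’s electromechanical dynamos: it simply sums sine waves at integer multiples of a fundamental frequency. The function name, sample rate, and the halving-amplitude “organ-like” recipe are all illustrative assumptions.

```python
import math


def additive_tone(fundamental_hz, harmonic_amps, sample_rate=8000, duration_s=0.01):
    """Additive synthesis: sum sine waves at integer multiples of a fundamental.

    harmonic_amps[k] is the amplitude of harmonic k + 1 (k = 0 is the fundamental).
    """
    n_samples = round(sample_rate * duration_s)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        samples.append(sum(
            amp * math.sin(2 * math.pi * (k + 1) * fundamental_hz * t)
            for k, amp in enumerate(harmonic_amps)
        ))
    return samples


if __name__ == "__main__":
    # Hypothetical recipe: each higher harmonic at half the previous amplitude.
    tone = additive_tone(200, [1.0, 0.5, 0.25, 0.125])
    print(len(tone), max(tone))
```

Changing the list of harmonic amplitudes changes the timbre while the pitch, set by the fundamental, stays the same; the Telharmonium’s operators shaped its tone in an analogous way by mixing the outputs of different dynamos.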

Telephony, like the telegraph but far more immediate, was largely used for one-to-one communication. However, at the same historical moment, another type of technology was being developed that would, in the years to come, radically change the way information spread worldwide, sparking a revolution in mass media and communication.

Becoming Wireless: The Age of Radio and TV

Above: radio inventor Guglielmo Marconi

In 1873, James Clerk Maxwell predicted the existence of electromagnetic waves. Then, in the late 1880s, Heinrich Hertz conducted the first experiments confirming their presence using a rudimentary spark-gap transmitter. In 1895, Guglielmo Marconi, inspired by Hertz’s experiments, used a similar transmitter to send Morse code signals wirelessly. At first, the system worked only over short distances, but Marconi progressively extended its range. By the first decade of the 20th century, Marconi had sent messages across the English Channel and eventually across the Atlantic Ocean. In those early years, this technology was referred to as wireless telegraphy or radiotelegraphy. His system laid the foundation for maritime communication, allowing ships to maintain contact in open waters, but its broader influence was even more monumental.

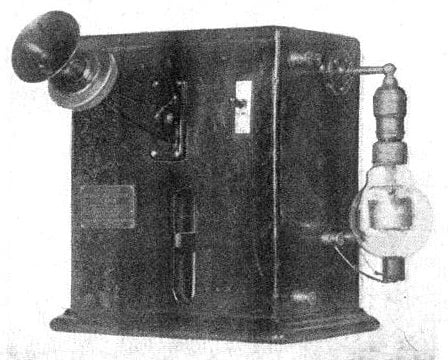

First vacuum tube AM radio transmitter, built in 1914 by Lee De Forest


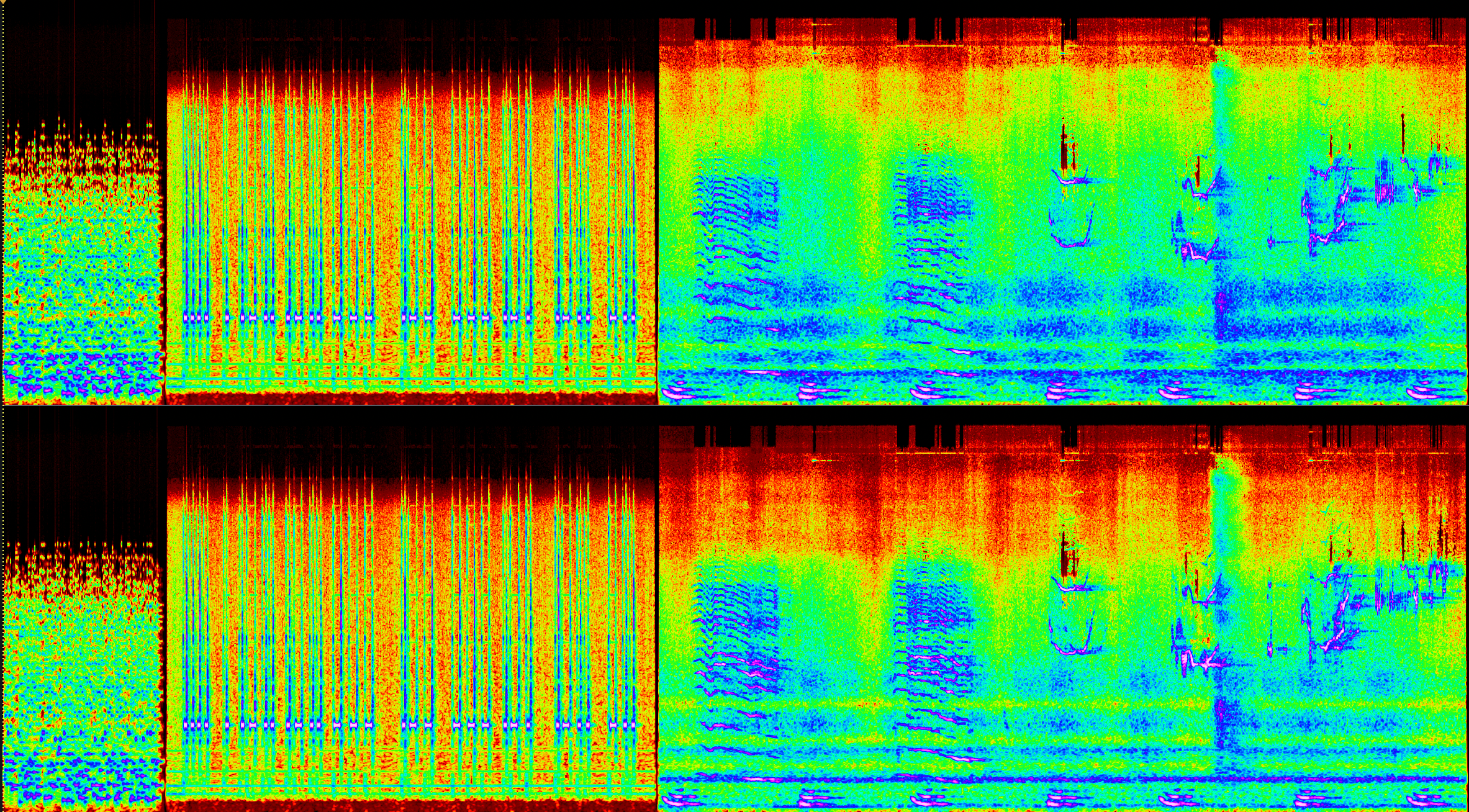

It is worth noting that radio itself is perhaps among the most haunting of communication media. Even its operating principles are astonishing when we pause to consider them. First, there is a transmitter, typically consisting of a high-frequency oscillator that generates a carrier wave, a modulator that encodes the source signal onto that carrier by varying its amplitude or frequency (AM or FM), and an amplifier. Then, there is an antenna transforming electrical signals into ethereal electromagnetic waves. These waves ripple outward, bouncing off our planet’s ionosphere and sometimes bleeding into the cosmos. On the other side sits the receiver, potentially thousands of miles away, also featuring an antenna to detect these invisible waves, an amplifier, a frequency-selection circuit to tune to specific frequency bands, and a demodulator that extracts the encoded signal from the wave.
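Amplitude modulation and its simplest demodulator can be simulated with a few sampled waveforms. In this sketch, all parameters (a 48 kHz sample rate, a 2 kHz carrier, a 50 Hz “program” tone, and a modulation depth of 0.5) are illustrative assumptions, scaled far below real broadcast frequencies so the example runs quickly. The transmitter scales the carrier by (1 + m·message); the receiver recovers the message with envelope detection, that is, rectifying the signal and averaging it over one carrier cycle, the same principle as a classic crystal radio.

```python
import math

SAMPLE_RATE = 48_000  # samples per second (illustrative)
CARRIER_HZ = 2_000    # carrier frequency; 24 samples per carrier cycle
MESSAGE_HZ = 50       # the "program" tone being broadcast
DEPTH = 0.5           # modulation index m, kept below 1 to avoid overmodulation


def am_modulate(message, depth=DEPTH):
    """Amplitude modulation: scale the carrier by (1 + m * message)."""
    return [
        (1 + depth * x) * math.cos(2 * math.pi * CARRIER_HZ * n / SAMPLE_RATE)
        for n, x in enumerate(message)
    ]


def envelope_detect(signal, depth=DEPTH):
    """Rectify, then average over one carrier cycle to recover the message."""
    window = SAMPLE_RATE // CARRIER_HZ  # 24 samples = one carrier period
    half = window // 2
    rectified = [abs(s) for s in signal]
    recovered = []
    for n in range(half, len(signal) - half):
        avg = sum(rectified[n - half:n + half]) / window  # centred moving average
        # The mean of |cos| over a cycle is about 2/pi: undo that scale and the DC offset.
        recovered.append((avg * math.pi / 2 - 1) / depth)
    return recovered


if __name__ == "__main__":
    message = [math.sin(2 * math.pi * MESSAGE_HZ * n / SAMPLE_RATE) for n in range(4800)]
    received = envelope_detect(am_modulate(message))
    # received[i] approximates message[i + 12]; the small residual error comes
    # from sampling the carrier and averaging over a short window.
    print(max(abs(r - message[i + 12]) for i, r in enumerate(received)))
```

Real receivers use analog rectifiers and filters rather than a moving average, and FM requires a different demodulator entirely, but the sketch shows the core idea: the message rides on the carrier’s amplitude and can be read back from its envelope.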

Although radio eventually gave way to television and later the internet, its concept and aesthetic—rooted in the interplay of signal and noise—retain a distinct enigmatic quality. Tuning across the electromagnetic spectrum, where signals emerge from a sea of static, gives it an aura of mystery and unpredictability, almost like conjuration.

This capacity for encoded meaning made radio an indispensable tool during times of conflict, particularly World War II, when it reached the height of its strategic significance. The BBC famously leveraged radio to deliver secret messages to resistance fighters across Nazi-occupied Europe, embedding them in otherwise ordinary news broadcasts. Sometimes random-sounding phrases were used, but occasionally, rhythmic or musical cues were included. One of the most famous examples took place on “Les Français parlent aux Français” (“The French Speak to the French”), where the widely familiar four-note motif of Beethoven’s Fifth Symphony (“tah-dah-dah-daaah”) would play; its short-short-short-long rhythm matches the Morse code for the letter “V” (...-), symbolizing victory.

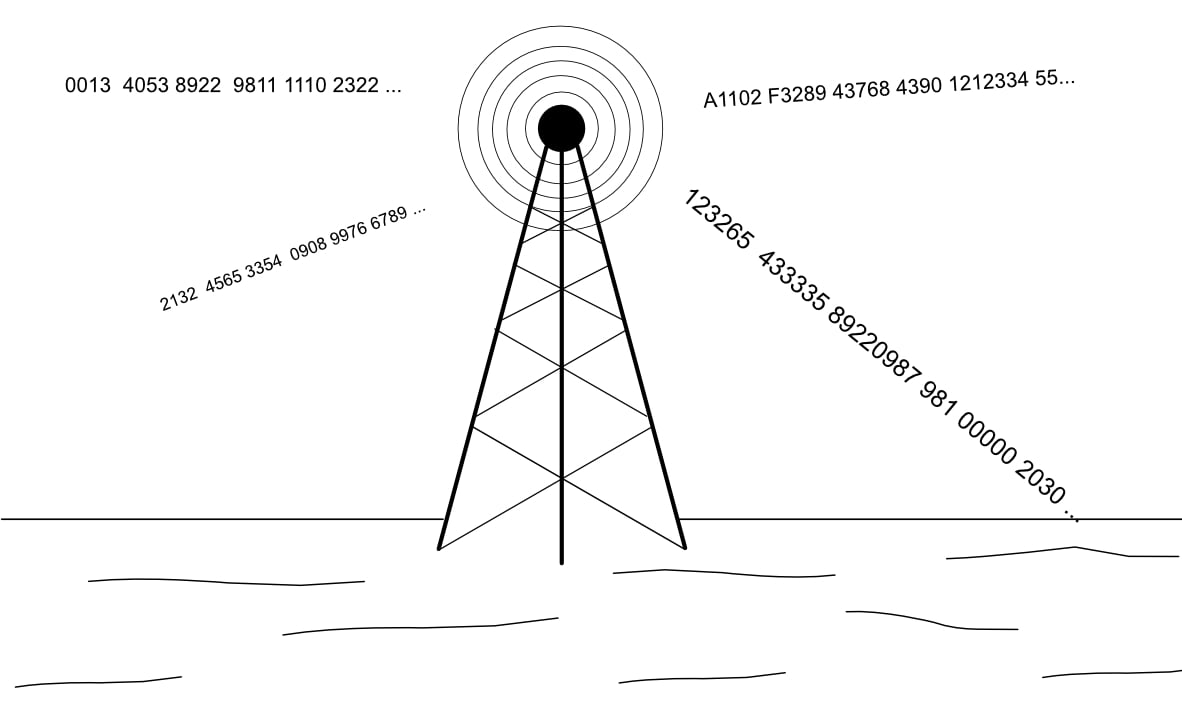

No expression of radio’s enigmatic power is as haunting or enduring as number stations. These cryptic shortwave broadcasts consist of sequences of seemingly random numbers, letters, or coded phrases, often layered with peculiar tones, fragmented melodies, or eerie synthetic voices. Thought to have emerged in the early 20th century and to have peaked during the Cold War, they are widely believed to be clandestine government transmissions designed to deliver encrypted instructions to undercover operatives. Despite the decline of traditional espionage and the rise of advanced digital encryption, some number stations still send their cryptic signals into the ether, captivating shortwave enthusiasts, conspiracy theorists, and intelligence analysts alike.

By the middle of the 20th century, television replaced radio as the primary source of news and entertainment. While sharing the same infrastructure and theoretical foundation as radio, television supplemented the sound with moving images, becoming a home staple that gathered all eyes in a single room. Occasionally, television has also been used to transmit coded messages. Some instances are subtle—for example, during the Soviet era, whenever some turmoil occurred in the country (like the death of a leader), Tchaikovsky’s “Swan Lake” would be broadcast repeatedly. Similarly, teletext was used in certain “TV number stations” to deliver encrypted instructions to spies. With the arrival of digital technology, the options for encryption only increased.

Becoming Digital: A Path to Artificial Intelligence

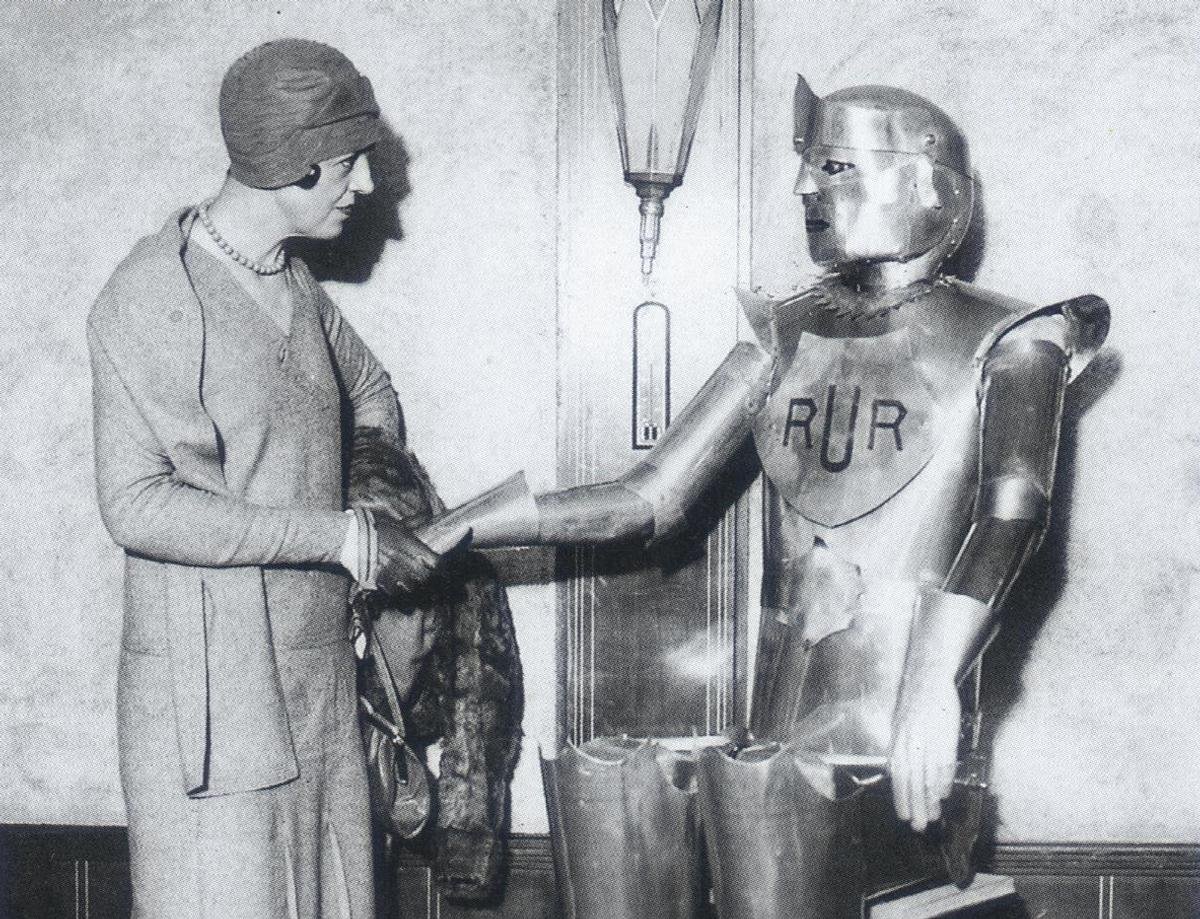

The image above shows Eric, the UK’s first robot, being greeted by Mrs. Jane Houston in the lounge of a New York hotel (Image source: theoldrobots.com)

Although Shannon’s theory of information initially met with excitement and curiosity, it also generated confusion and misunderstanding. The theory boggled minds, evoked mistrust, and sometimes even inspired jokes. The idea of treating “information” as a measurable quantity—independent of meaning—was counterintuitive for fields like linguistics, psychology, and traditional communication studies, where “information” was viewed as inherently tied to content or understanding. Anthropologist Margaret Mead was suspicious of excluding meaning from the definition of information. Norbert Wiener, the founder of cybernetics, saw many parallels between his own ideas and Shannon’s but expressed concern over ignoring the semantic and cultural dimensions of information.

Norbert Wiener


Meaning, dynamical interactions, and feedback loops were central to cybernetics. Wiener’s view was that information has practical implications in biological and social systems where meaning and interpretation are crucial. Shannon’s omission of “meaning” was deliberate: his theory focused on the efficient transmission of signals over a noisy channel, where content is irrelevant to whether or not one can reliably transmit information. As the digital revolution unfolded over the next decades, these two perspectives turned out to be complementary. Shannon tackled the engineering problem of transmitting information, while Wiener focused on adaptive, dynamic systems such as human behavior—an essential viewpoint in developing fields like artificial intelligence, robotics, and human-computer interaction. Both envisioned, albeit in different ways, a future where machines would be deeply interconnected with humans, possibly even resembling us.

From automata in ancient myths to Charles Babbage’s designs for mechanical computing engines and Ada Lovelace’s insight that such machines could be programmed; from Cartesian concepts of “biological machines” to Čapek’s introduction of the word “robot”; from Homer Dudley’s operator-controlled voice synthesizer, the Voder, to today’s autonomous, realistic-sounding AI voice assistants; and from Alan Turing’s Imitation Game (the Turing Test) to ChatGPT, artificial intelligence seems both an unlikely and an almost inevitable outcome of human cultural evolution. The product of centuries of imagination, study, philosophy, inspiration, and experimentation, artificial intelligence is a testament to our unending curiosity about the nature of life.

Over the course of history, many terms have been used to describe what Alan Turing famously called “thinking machines”: automaton, mechanical brain, cyborg, machine intelligence, electronic brain. All of these suggested a machine-human hybrid, but the term that stuck was artificial intelligence. John McCarthy coined it in the 1955 proposal for the 1956 Dartmouth Conference (formally, the Dartmouth Summer Research Project on Artificial Intelligence), which he organized with Marvin Minsky, Claude Shannon, and Nathaniel Rochester. The proposal stated:

“The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it. An attempt will be made to find how to make machines use language, form abstractions and concepts, solve the kinds of problems now reserved for humans, and improve themselves.”

The Dartmouth Conference launched AI as a legitimate field of inquiry. It spurred the creation of pioneering research groups such as the MIT AI Project (co-founded by McCarthy and Minsky) and the Stanford AI Lab (founded by McCarthy). The field’s founders were ambitious from the outset. They underestimated the complexity of simulating intelligence and overestimated the capabilities of early AI programs, and expectations became inflated as a result. They believed truly “smart” machines would be relatively straightforward to build, and this mismatch between ambition and feasibility kept AI on the fringes of technology for many years. Ultimately, AI re-emerged, though not in the form of those early science-fiction dreams. It returned as a pivotal force shaping the modern era.

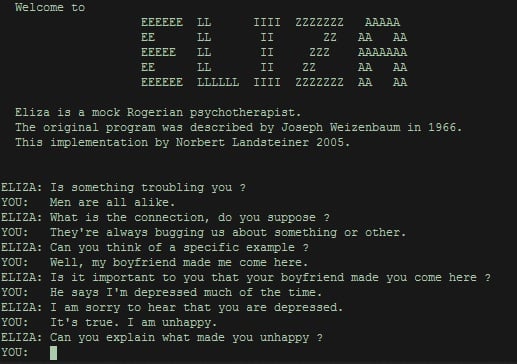

ELIZA, developed by Joseph Weizenbaum at MIT between 1964 and 1967, was among the earliest practical applications of natural language processing, revealing much about human-machine interaction. Using pattern matching and substitution techniques, the program—lacking any real cognitive grasp—could give users the illusion of understanding. Its most famous script, known as the “DOCTOR” script, mimicked a psychotherapist using a Rogerian style, reflecting the user’s input back to them in a way that prompted engaging, introspective conversations.
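The technique of pattern matching and substitution can be sketched in a few lines. The example below is a minimal illustration in the spirit of the DOCTOR script. The rules, the pronoun table, and the names `RULES`, `REFLECTIONS`, `reflect`, and `respond` are hypothetical and are not taken from Weizenbaum’s original program. Each rule is a regular expression paired with a response template. Before a captured fragment of the user’s input is echoed back, its pronouns are swapped.

```python
import re

# Hypothetical rules in the spirit of ELIZA's DOCTOR script; illustrative
# only, not Weizenbaum's original script. Rules are tried in order.
RULES = [
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "Why do you say you are {0}?"),
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "How long have you felt {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Pronoun swaps applied to the captured fragment before it is echoed back.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    """Lowercase the fragment, drop trailing punctuation, and swap pronouns."""
    words = fragment.lower().rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(w, w) for w in words)


def respond(user_input: str) -> str:
    """Return the first matching rule's template, filled with the reflected
    fragment; fall back to a neutral prompt when nothing matches."""
    for pattern, template in RULES:
        match = pattern.search(user_input)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please go on."


print(respond("I feel ignored by my family."))
```

The program never models what “ignored” or “family” mean. It only rearranges the user’s own words. Yet the output, “How long have you felt ignored by your family?”, can feel attentive, and this is the illusion of understanding the paragraph above describes.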

The insights gleaned from ELIZA were just as significant as the technical achievement. Weizenbaum’s project revealed not only the promise of computer-assisted communication but also the limitations of such early AI systems. Moreover, many users became surprisingly emotionally attached to ELIZA, stirring ethical and philosophical questions about the future of human-machine relationships. Weizenbaum himself later cautioned against overstating the capabilities of these programs, emphasizing that superficial mimicry should not be mistaken for genuine intelligence.

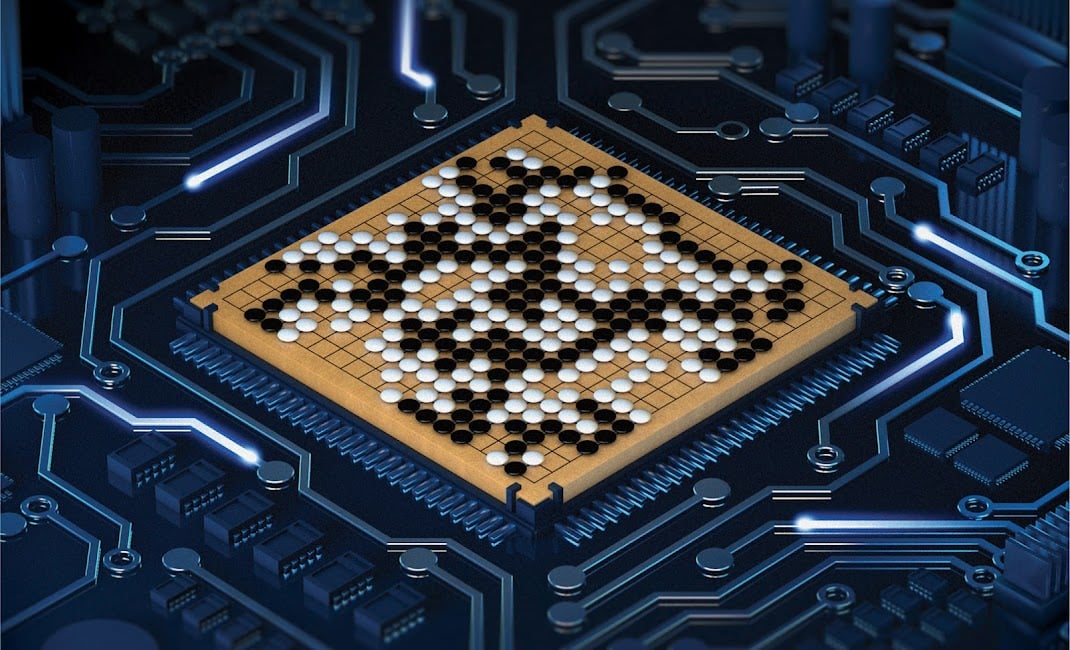

A lot has changed since ELIZA. In the past decade, AI has moved from theoretical speculation to an undeniable reality in everyday life. Breakthroughs in deep learning, neural networks, and computational power have propelled AI into industries ranging from healthcare to finance, creativity, and communication. A defining moment came in 2016, when DeepMind’s AlphaGo defeated Lee Sedol, one of the world’s top Go players, demonstrating a kind of machine intuition that went beyond brute-force computation. Soon after, AlphaZero mastered chess, shogi, and Go through self-play alone, without any human-generated game data. Generative AI models like Stable Diffusion and DALL·E have unleashed unprecedented creativity, producing stunning visual art from mere text prompts. The biggest shift arrived with large language models such as ChatGPT, Claude, and DeepSeek. These models show an astonishing capacity to understand and generate human language, and they are redefining how we interact with technology. AI systems now assist in scientific research, medicine, law, and education. They improve with each new generation, and they are igniting debates on ethics, safety, and security.

So here we are, moving through a world increasingly co-populated by AI agents. What does that mean for us and for the environment? Predictions in popular culture remain contradictory. Will AI solve our problems and make life easier? Will it wreak havoc on humanity, increasing suffering if misused by authoritarian governments, greedy corporations, or malicious actors? Or will it simply fail through some catastrophic error? Will we face a rogue machine like HAL 9000 from 2001: A Space Odyssey or a hostile superintelligence like Skynet from The Terminator? Or will we coexist with an evolved form of sentience, sensitive and emotive, like Yang in Kogonada’s After Yang or David in Spielberg’s A.I.? At this point, we cannot pretend to know the outcome, but many agree that the effect will be dramatic.

A joint study by the Ada Lovelace Institute and The Alan Turing Institute found that more than half of people surveyed in Britain had mixed to negative feelings about AI, citing concerns about privacy and information security. A similar Pew Research Center study indicates a comparable sentiment in the United States. More than any revolutionary technology in the past, AI—autonomous and adaptive—presents tough questions. Yet it also opens new frontiers of understanding and interaction previously unimaginable. One such frontier is in the research fields of bioacoustics, phytoacoustics, and ecoacoustics, all of which focus on the study of sound and acoustic communication in animals, plants, and entire ecosystems.

Can artificial intelligence really help us communicate with other organisms?

Listening to the Other: Decoding Animal Communication

Among the technological inventions of the World War I era was the hydrophone—a simple piezoelectric microphone specialized for listening to sounds underwater. Hydrophones were essential for locating submarines in the vast ocean. In 1916, the German UC-3 became the first submarine to be detected and sunk using a rudimentary tube-amplified hydrophone. Over time, hydrophones improved and remained a staple of maritime military arsenals. By the Cold War, parts of the ocean were under constant surveillance by top-secret hydrophone arrays designed to detect passing Soviet submarines. Yet sometimes, these microphones also picked up other oceanic sounds.

Roger Payne


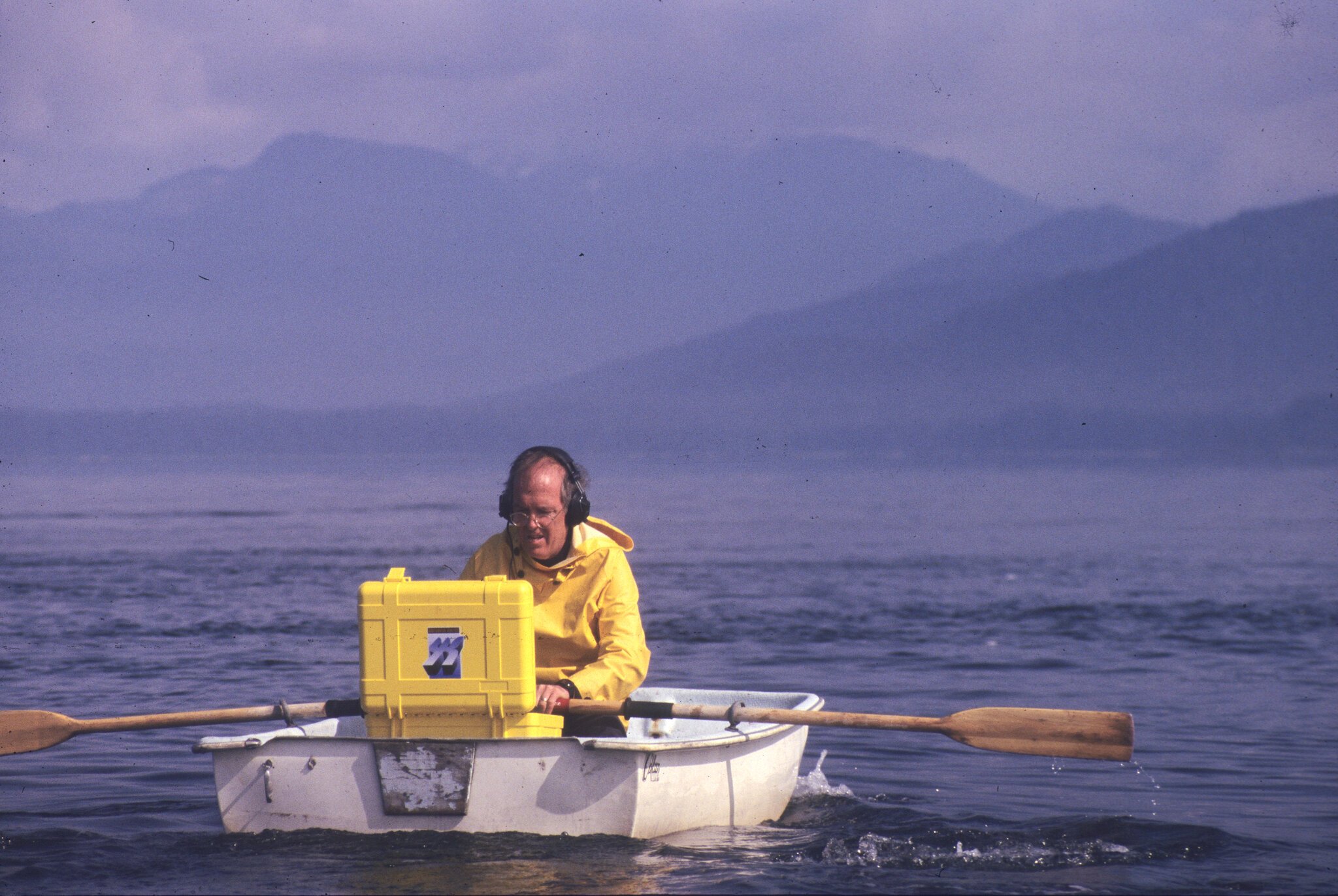

One person who noticed these mysterious sounds was Frank Watlington, a U.S. Navy engineer stationed near Bermuda in the 1960s. Watlington, with access to a single high-resolution hydrophone from an array, began to hear unusually complex and eerily melodious sounds emanating from the ocean depths. He started recording them. Observing correlations between these sounds and passing humpback whales, he grew curious.

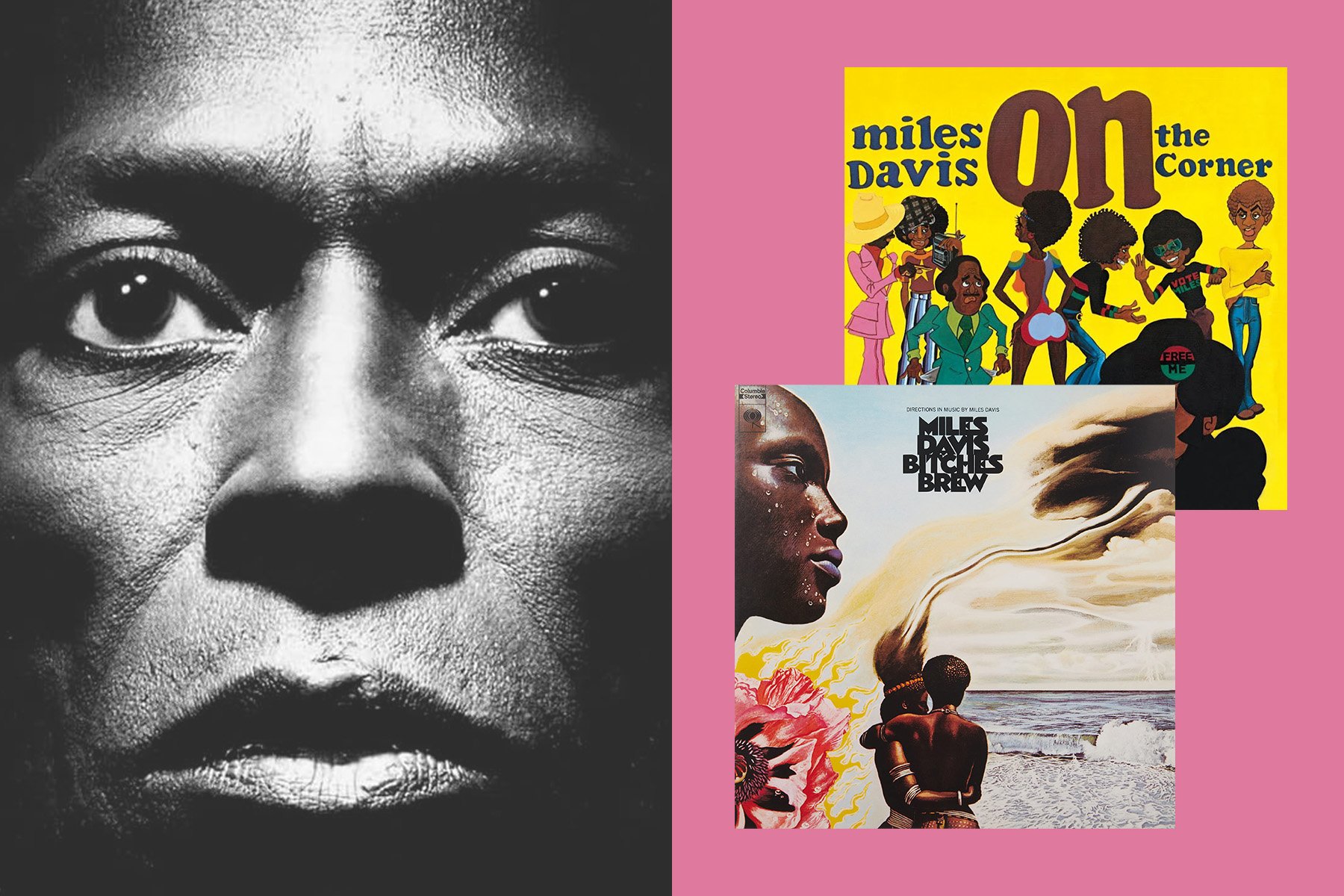

News of Watlington’s discovery reached two young researchers, Roger and Katy Payne. Moved by a personal experience of encountering a breaching whale and by a brief recording of right whale sounds, the Paynes were on a mission to change public perception of whales—then largely shaped by the whaling industry. They aimed to show whales as sentient, intelligent, and complex beings, and their haunting vocalizations as a gateway into people’s hearts. Unsurprisingly, when the Paynes heard about Watlington’s recordings, they eagerly sought him out.

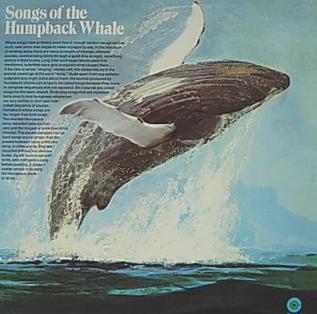

Concerned that his recordings might be misused by whalers, Watlington entrusted them to the Paynes, who spent months obsessively analyzing the tapes. They had an epiphany: the whale sounds contained repeating structures, like a form of music. The Paynes enlisted collaborator Scott McVay, who helped transform the sounds into spectrographic images that confirmed the patterns in humpback whale songs. Further discoveries followed. Whales in the same population sang the same song, and that song evolved from year to year. The public was captivated. The 1970 LP “Songs of the Humpback Whale”, featuring Watlington’s recordings, went multi-platinum. It profoundly influenced the “Save the Whales” movement by changing how people saw these ocean giants.
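A spectrogram plots a sound’s frequency content against time. A repeating phrase in a song therefore shows up as a repeating visual shape. The sketch below computes a spectrogram with a naive discrete Fourier transform over short, overlapping, windowed frames. It is a modern digital stand-in, not the method McVay used. The two-tone test signal, the frame size, the hop length, the Hann window, and the function names are all illustrative assumptions.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Magnitudes of DFT bins 0..N/2 (naive O(N^2) transform, for clarity)."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n)))
            for k in range(n // 2 + 1)]


def spectrogram(samples, frame_size, hop):
    """One row of bin magnitudes per windowed frame: frequency content over time."""
    # Hann window: tapers each frame's edges to reduce spectral leakage.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * t / frame_size)
              for t in range(frame_size)]
    rows = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [s * w for s, w in zip(samples[start:start + frame_size], window)]
        rows.append(dft_magnitudes(frame))
    return rows


# Hypothetical test signal: 0.1 s at 500 Hz, then 0.1 s at 1500 Hz.
RATE = 8000  # samples per second
low = [math.sin(2 * math.pi * 500 * t / RATE) for t in range(800)]
high = [math.sin(2 * math.pi * 1500 * t / RATE) for t in range(800)]
rows = spectrogram(low + high, frame_size=64, hop=32)

bin_hz = RATE / 64  # 125 Hz per frequency bin
for i in (0, len(rows) - 1):
    peak = max(range(len(rows[i])), key=rows[i].__getitem__)
    print(f"frame {i}: strongest frequency ~{peak * bin_hz:.0f} Hz")
```

The first frame peaks near 500 Hz and the last near 1500 Hz, so the change in pitch over time becomes visible as a shift between rows. Practical tools would replace the quadratic loop with a fast Fourier transform, such as NumPy’s `numpy.fft.rfft`.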

In 1975, Greenpeace, still in its early years, organized an expedition to the North Pacific Ocean. The aim was to expose and disrupt Soviet whaling while also attempting to communicate with these now-famous “singing” whales. On board was artist Will E. Jackson, who brought a Serge Modular synthesizer (System #1), freshly constructed at the CalArts workshop led by Serge Tscherepnin. The plan was to mimic whale clicks, moans, and squeals with the synthesizer, send them underwater, and hope for a response. Humans have imitated animal calls for millennia, often in order to hunt. This was different: it was an artistic and emotional attempt to connect rather than to harm. Despite its cutting-edge approach, the effort never amounted to true interspecies communication. Still, it set a precedent: an intent to connect and an openness to new possibilities.

In the 1980s, Katy Payne expanded her studies to another of Earth’s giants, the elephant, amid devastating poaching and growing awareness of elephants’ intelligence. At the Portland Zoo, a young calf named Sunshine approached Payne with her trunk, and Katy felt a low, thunderous vibration passing through her body. Familiar with whale communication, she hypothesized that elephants might be using infrasound: low-frequency waves below the range of human hearing. She returned with specialized recording equipment. With colleagues William Langbauer and author Elizabeth Marshall Thomas, she recorded the calls and played them back at higher speed, which shifted them up into the range of human hearing. This confirmed that elephants do indeed communicate in the infrasonic range. It also helped solve a longstanding riddle: how elephants coordinate across miles of savannah. Their rumbling messages function much like human “talking drums.” Over time, researchers identified calls such as “Let’s go,” along with greeting and contact calls, revealing a nuanced elephant “vocabulary.”
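Why does speeding up a recording make infrasound audible? Playing a recording k times faster multiplies every frequency in it by k and divides its duration by k. The sketch below works through this arithmetic. The 15 Hz, 4-second rumble is a hypothetical value, not a measurement from Payne’s recordings. The 20 Hz threshold is the commonly cited lower limit of human hearing. The function name `speed_up` is our own.

```python
HUMAN_HEARING_FLOOR_HZ = 20.0  # commonly cited lower limit of human hearing


def speed_up(frequency_hz: float, duration_s: float, factor: float):
    """Return (frequency, duration) after playing a recording `factor` times
    faster: every frequency is multiplied by `factor`, and the duration is
    divided by it."""
    return frequency_hz * factor, duration_s / factor


# Hypothetical rumble: 15 Hz lasting 4 s (illustrative values only).
for factor in (1, 2, 4, 8):
    freq, dur = speed_up(15.0, 4.0, factor)
    audible = freq >= HUMAN_HEARING_FLOOR_HZ
    print(f"x{factor}: {freq:g} Hz for {dur:g} s, audible: {audible}")
```

At double speed, the hypothetical rumble rises to 30 Hz, just above the threshold. At eight times speed, it becomes a 120 Hz tone lasting half a second. The trade-off is that the call is compressed in time along with the shift in pitch.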

Subsequent studies have confirmed that many creatures, including bats, bees, some fish, and certain turtles, use sophisticated acoustic communication systems. Aquatic life, once thought silent, turns out to be rather chatty. Water is an excellent medium for sound, which travels roughly four times faster in water than in air. Yet humans often fail to detect or interpret these sounds because of our own sensory limitations.

Bioacoustics as a field began in the early 20th century with Slovene biologist Ivan Regen, known for studying insect calls (notably cricket duets). It has always depended heavily on technology—microphones with sensitive frequency ranges, reliable amplifiers, and sophisticated recording equipment. Even though these technologies improved dramatically during the digital revolution, researchers still primarily relied on human perception to find patterns in animal vocalizations, which is slow and error-prone. The big shift came in the mid-2010s, propelled by breakthroughs in artificial intelligence. Computers, unlike humans, excel at analyzing large datasets and detecting subtle patterns. Today, AI offers us an unprecedented opportunity to decode and understand the communication of other inhabitants of our planet.

Recent findings are astonishing. For instance, prairie dogs, which build underground tunnel networks, have been shown to possess one of the most complex vocal systems in non-human animals. Previously, we assumed their chirps simply served as alarm calls. Biologist Con Slobodchikoff studied the animals for 30 years and discovered far more meaning in these seemingly simple calls. Using sound-analysis software and statistical methods, he found that prairie dogs have predator-specific alarm calls conveying details like size, shape, color, and speed of the threat. They also use combinatorial structures—a proto-syntax—to form new meanings, distinguish individual identities via unique calls, and even exhibit dialects in different colonies or species.

AI has also become instrumental in studying bee communication, offering insights into the intricate acoustic and vibrational signals within hives. Karl von Frisch famously decoded the waggle dance, which communicates the distance and direction of food. Newer research shows that bees also rely on buzzing, substrate vibrations, and wingbeat modulation to signal urgency, coordinate activity, and respond to environmental changes. Machine learning systems can now classify different buzzing patterns in real time, identifying hunger, distress, or defensive behavior. AI is also helping scientists analyze the vibrational “piping” signals that queen bees produce, notably around swarming and competition between rival queens. Moreover, experiments with robotic bees that emit synthetic signals help researchers bridge the gap between bioacoustics and behavioral ecology. This work could eventually allow beekeepers to interpret hive health and prevent colony collapse.

Whale song, the iconic subject of bioacoustics, is far from fully understood but has benefited enormously from AI. The Cetacean Translation Initiative (CETI), launched in 2020, is an ambitious effort merging machine learning, robotics, and marine biology to decipher sperm whale vocalizations. Known as codas, these click-based sequences may function like words or phrases. CETI’s strategy involves underwater recording devices, AI models trained in natural language processing (NLP), and long-term behavioral observation. The goal is to determine whether whales have a genuinely syntactic language. If successful, this could mark a groundbreaking moment in interspecies communication, bridging our cognitive divide with one of Earth’s most enigmatic creatures.

Conclusion

From the cosmic echoes of the Big Bang to the “secret” languages of bees, whales, and prairie dogs, sound emerges as a fundamental bearer of information. Any given sound is essentially a code that compresses multiple layers of data into a traveling wave. That wave becomes meaningful in the right context and with attentive listening. Those of us who studied music may recall having to learn to listen for specific nuances in a piece in order to understand and reproduce what we hear. Music affects us directly on an emotional level, but recreating that effect consistently demands decoding sounds into symbols and structures, a process we also apply to language.

Digital technology is often blamed for tuning us out of our environment, and social media’s global interconnectedness has not necessarily closed cultural gaps or brought the world closer together. Now, with artificial intelligence, we stand at a new threshold. For the first time, we may be able to extend our “conversation” beyond the human sphere, decoding the languages of animals, and perhaps even plants, in ways previously unimaginable. Bioacoustics, ecoacoustics, and phytoacoustics were once limited by human perception, and AI’s pattern-detection prowess is now revolutionizing them. With every scientific advance, we peel back further layers of meaning, revealing a living world full of voices we have yet to truly understand. This newfound knowledge can change us, encouraging deeper empathy for the biosphere and its many lifeforms.

Still, we must stay mindful of the ethical dilemmas that powerful technology can pose. Understanding other organisms should not be used to manipulate them. Our capacity to hack and decipher communication systems across species should be directed toward conservation and biodiversity, not exploiting lifeforms for commercial gain or further disrupting fragile ecosystems.

As AI grows ever more powerful, the essential question will not be what it can do, but what it should do. If artificial intelligence enables us to communicate across species, it will be a test not so much of machine intelligence, but of our own maturity, collective wisdom, and willingness to listen. Standing on the threshold of an entirely new form of connection with the living world, what we do next will shape the future—not only of AI, but of other organisms and our own role within Earth’s intricate web of life.