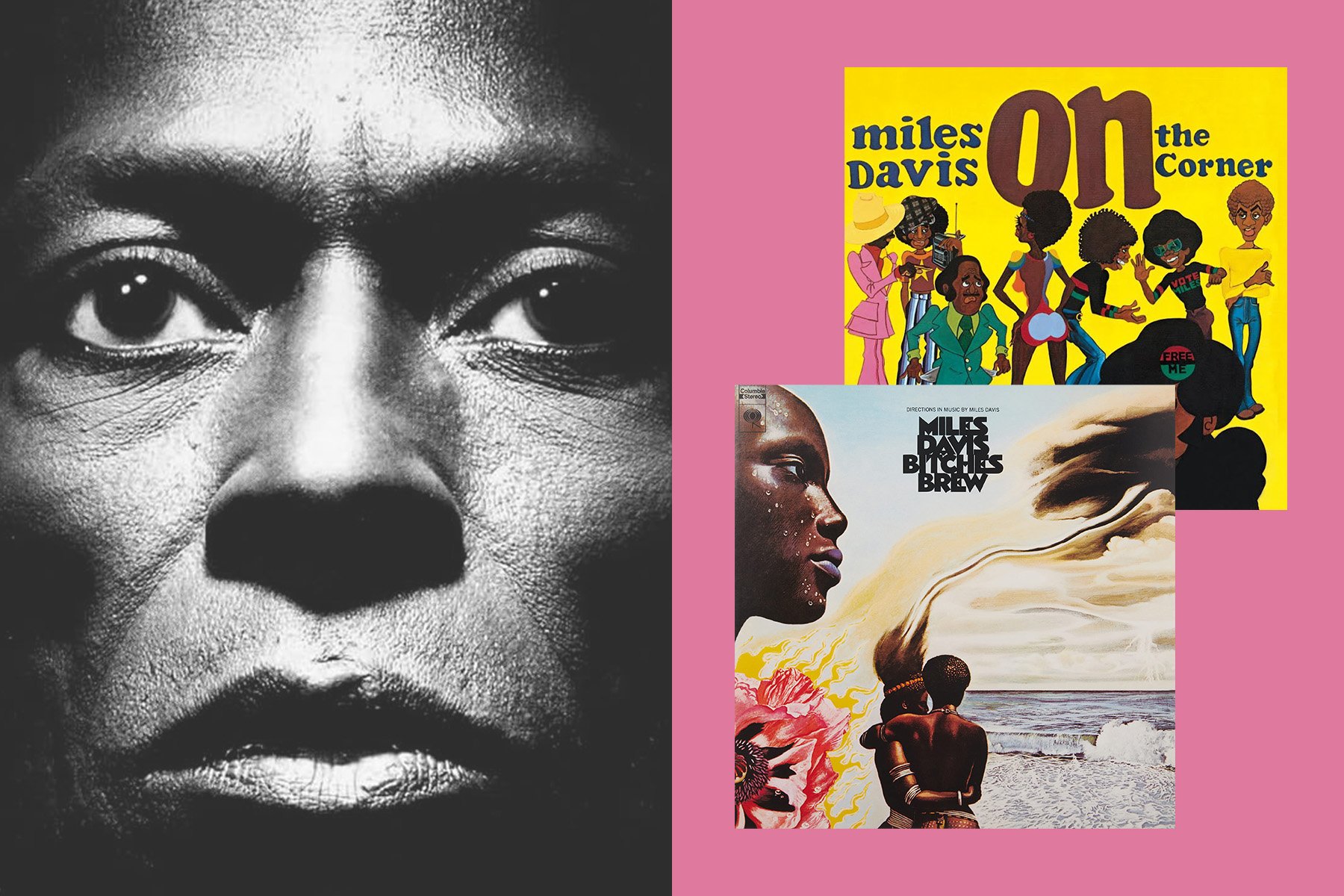

Aspiring electronic musicians often wonder whether a background in music theory is a necessary—or at least useful—prerequisite for their creative journey. While some knowledge of music theory, music history, the physics of sound, and perception can certainly be helpful and inspirational, there are many reasons why a deep knowledge of music theory isn't at all necessary for producing exceptional electronic music. In fact, the history of electronic music has been deeply influenced by utopian philosophies around sound creation that explicitly sought to distance electronic music from older forms of music making.

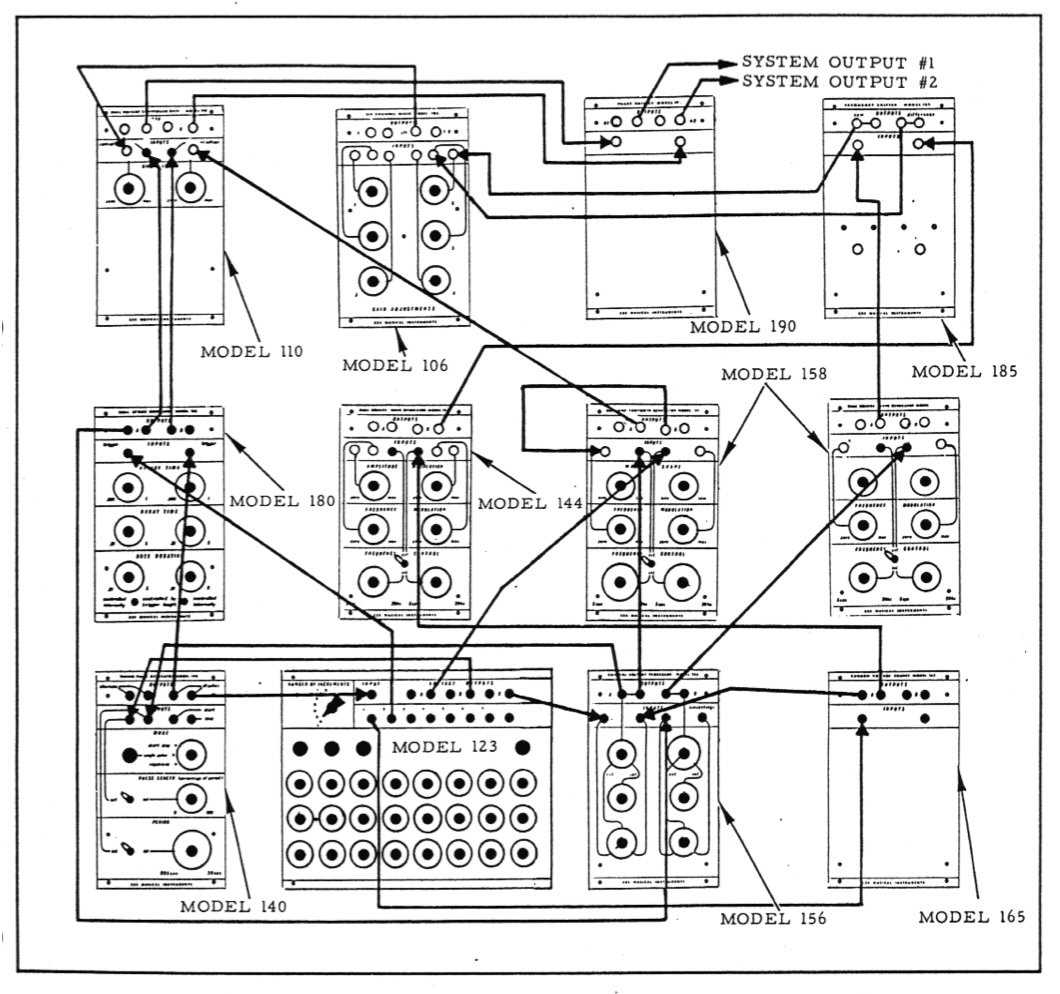

Many early synthesizer artists and manufacturers were fascinated by the possibility of creating new forms of music never before heard on earth. For example, the Buchla Modular Electronic Music System, created by Donald Buchla in the 1960s, reflected a radical departure from conventional music-making. Buchla's instruments were designed with the intention of generating sounds that were unimaginable in the realm of traditional music. Unlike other electronic instruments that emulated the familiar layouts of keyboards, Buchla's early instruments intentionally avoided such familiar interfaces and encouraged experimentation with sound itself through new controllers, such as capacitive touch plates, fostering a non-traditional, exploratory approach to music composition.

[Above: a patch diagram from an early user guide for the Buchla 100 Modular Electronic Music System.]

Recently, the impact of post-structuralist philosophy on electronic music has deepened the genre's departure from conventional norms. Philosophers such as Gilles Deleuze and Félix Guattari provided electronic artists with conceptual tools to reimagine music. Their concept of “rhizomes” (decentralized, interconnected networks) reflects electronic artists’ embrace of non-linear, non-hierarchical approaches to composition and production. Similarly, Jacques Derrida's deconstruction theory has inspired musicians to dismantle conventional musical elements, challenging norms and expectations and even inspiring new genres of electronic music such as Hauntology.

That said, some electronic music has always drawn on older forms, and a basic knowledge of music theory can certainly inspire innovation. Understanding the accumulated wisdom of centuries of music making cannot fail to enrich an artist's work, providing a broader palette from which to draw. In this article we’ll explore why music theory isn’t necessarily a prerequisite for creating electronic music, but we’ll also touch on some reasons why it might still be useful to learn just a bit of theory (and where to start).

What is Music Theory Anyway?

In a broad sense, music theory is simply the study of the structure and practice of music. That can be interpreted to encompass innumerable musical traditions from around the world, not only the European harmonic tradition, and in principle it includes electronic music as well.

For most people, music theory probably brings to mind common-practice chordal harmony, a set of “rules” that arose out of the European polyphonic and contrapuntal tradition. While harmony is just one aspect of music theory, it is true that music pedagogy has often focused on this aspect of music theory almost to the exclusion of other subjects.

Harmony, with its roots in European classical music, forms the largest part of most music theory courses and is the basis of most styles of popular music and jazz. But ironically, many early European composers didn’t understand music theory in a modern sense! Composers in the Renaissance and Baroque periods (roughly 1400–1600 and 1600–1750 respectively) tried to ensure that the various instruments or voices in an ensemble followed melodies that were roughly stepwise, avoided large intervallic leaps, and emphasized neither unresolved dissonance nor prolonged parallel perfect consonances. These contrapuntal principles (later codified and explained post hoc as species counterpoint) were not widely thought of as “chords” at the time, but were instead conceptualized as harmony resulting from polyphony. The modern understanding of chords labeled by chord symbols, with a certain typical order and grammar, arose later to explain the harmonic practices that had developed over centuries.

It’s no coincidence that a chordal harmonic conception of music arose alongside the development of keyboard instruments such as the harpsichord, organ, and piano. A keyboard naturally suggests a mental model in which music is understood in terms of discrete chords, because its layout makes it possible to visualize and produce chords and harmonic progressions with relative ease. But there’s scant evidence that chordal harmony has a universal basis in perception. Music cultures around the world have created their own classical music traditions with their associated rules and value systems, and while polyphony apparently arose independently in many parts of Europe, Africa, and Oceania, not every music culture hears dissonance and consonance—tension and resolution—in the same way. Research with isolated Amazonian societies has shown that the perception of consonant intervals as pleasant and dissonant intervals as harsh seems to be largely a matter of acculturation. In other words, what sounds “right” in music is probably largely a matter of what you’re accustomed to hearing.

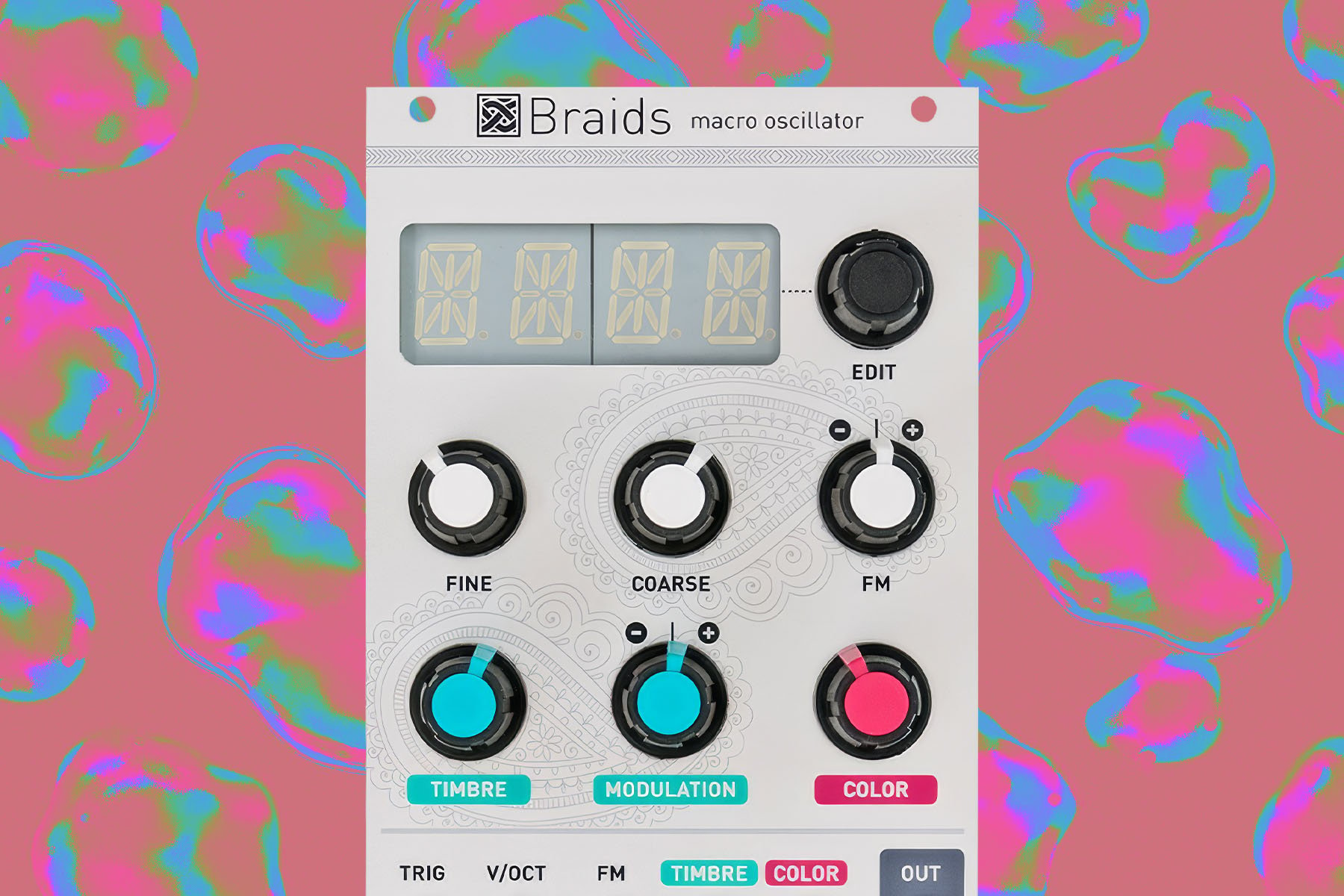

This has important implications for the place of music theory in electronic music. While it’s possible to make electronic music that uses common-practice harmonic conventions, including functional chordal harmony (and there are tools like the Instruo Harmonaig Chord Quantizer to help with that), we can see how even in classical music during the Common Practice Period, conceptions of harmony were themselves shaped by the instruments and technology available. In electronic music we don’t need to feel limited by the conventions of a musical style that was developed primarily around keyboard instruments. The technology of electronic music production will itself shape the development of new conceptions of harmony that reflect both the unquantized nature of voltage and the ease with which arbitrary and novel quantization can be applied.
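To make the idea of arbitrary quantization concrete, here is a minimal Python sketch of a pitch quantizer. It assumes a one-volt-per-octave control voltage, a convention common on modular synthesizers but not something specified above, and the function name `quantize` and its parameters are hypothetical. It illustrates the principle only and does not model any particular module.

```python
def quantize(voltage: float, divisions: int = 12, span_volts: float = 1.0) -> float:
    """Snap a control voltage to the nearest of `divisions` equal steps per `span_volts`.

    Assumption: pitch is carried as a voltage where `span_volts` equals one octave.
    """
    step = span_volts / divisions
    return round(voltage / step) * step


raw = 0.30  # an arbitrary, unquantized control voltage

twelve = quantize(raw)               # nearest of 12 equal steps per octave
seven = quantize(raw, divisions=7)   # nearest of 7 equal steps per octave

print(f"raw: {raw:.4f} V, 12 steps: {twelve:.4f} V, 7 steps: {seven:.4f} V")
```

Changing `divisions` from 12 to 7, 19, or any other number gives a different equal division of the octave, while skipping the function altogether leaves the pitch unquantized.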

Rhythm, a fundamental component of some genres of electronic music, frequently receives minimal attention in music theory textbooks, a situation that underscores a historical bias. Traditionally, music theory has been heavily skewed towards the study of harmony, particularly within the realms of classical and popular music, leaving the intricate study of rhythm somewhat in the shadows. This oversight reflects a European historical perspective where melody and harmony were often deemed the primary conveyors of musical expression and identity, relegating rhythm to a secondary role.

This is in stark contrast to some other classical music theories, such as Carnatic (South Indian) music theory, in which complex systems of rhythmic strata are built up from decoupled cycles of rhythm and pulse. These cycles resolve into larger rhythmic structures that lead to points of rhythmic tension and resolution.

Other topics that would typically be covered by a course in music theory include musical form, notation, scale, tuning, articulation, timbre, and sometimes, orchestration. Although a basic understanding of these ideas can inspire innovation, we need to distinguish aspects of music theory that are rooted in specific historical traditions from those that have a wider acontextual applicability. For example, a great deal of music theory scholarship is devoted to understanding historical forms, such as sonata-allegro form, and to understanding performance techniques or compositional practices that are primarily relevant to instrumental music of a certain period. It’s debatable whether a close study of these topics is even useful for creating great electronic music. Instead, cultivating a keen ear for the texture and detail of sound itself may offer more immediate and impactful benefits to contemporary electronic musicians.

Why You Might Not Need to Learn Music Theory

Electronic music is fundamentally different from traditional music genres that rely heavily on acoustic instruments and predefined harmonic structures. It often emphasizes texture, timbre, and rhythm over conventional melody and harmony. This focus on sonic qualities ahead of classical forms means that traditional topics in music theory are often less important than a discerning ear. The essence of electronic music lies in exploration and experimentation, including generative structures which are often outside the realm of established theoretical frameworks.

While artists like Aphex Twin and Autechre pushed rhythm and melody to their limits, many genres eschew even these familiar hallmarks of instrumental music. Arguably, electronic music has made timbre, rather than harmony, the central pillar of composition. Whole genres such as noise, drone, and spectral music are focused on timbre: the “texture” of a sound, which is our subjective impression of the built-up layers of overtones together with noise and transients.

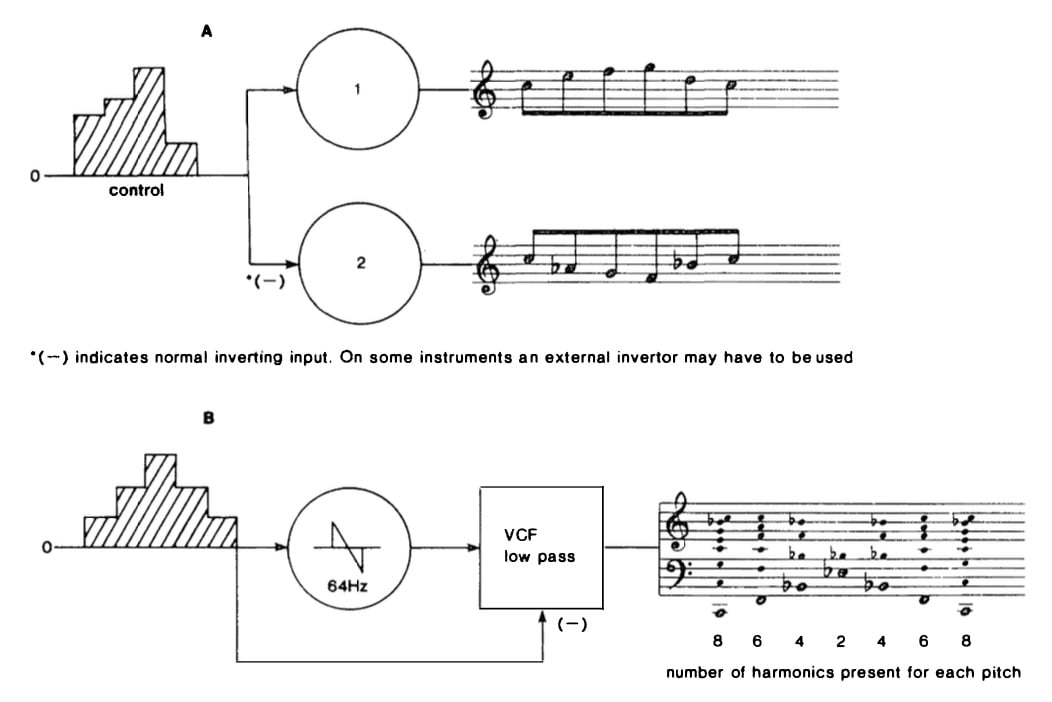

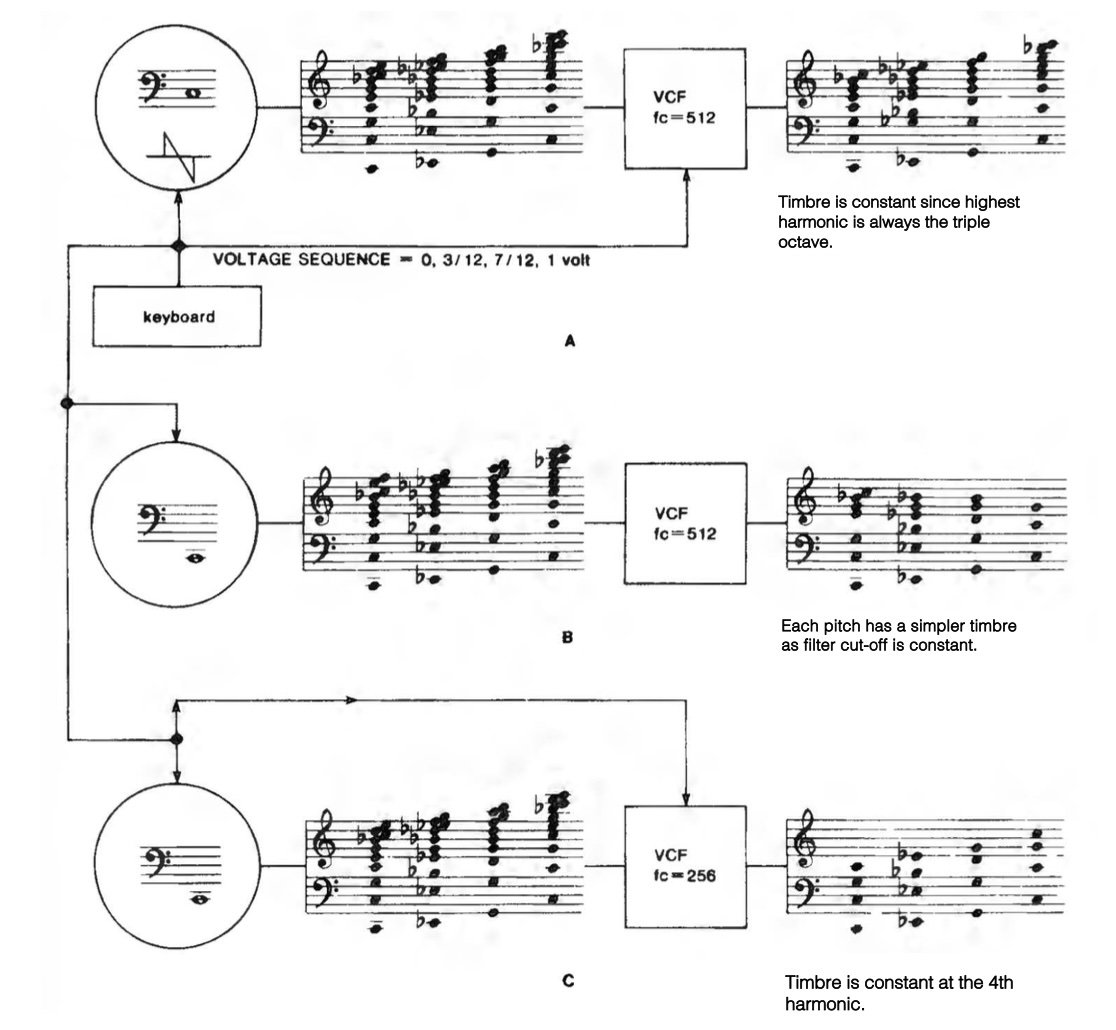

[Above: A diagram from Allen Strange's text Electronic Music: Systems, Techniques, and Controls, detailing thoughts on approaching pitch/melodic/harmonic control in the context of modular synthesizer systems.]

Classical music theory in the Euro-American tradition is largely built on the foundation of equal temperament and the division of the octave into twelve chromatic pitches, each octave doubling in frequency. This system provides the standard framework for pitch and harmony. However, in electronic music, these foundational principles become far less rigid. The nature of electronic music—with its capacity to manipulate samples or create compositions from broad-spectrum noise—often challenges the very notion of pitch as we understand it. Artists may choose to explore new scales or use pitch with no quantization at all.

Electronic music pioneer Wendy Carlos famously experimented with several non-octave-repeating scales based on just intonation but approximated by dividing non-octave intervals into arbitrary divisions. Her 1986 album Beauty in the Beast, for example, makes use of the Beta Scale (among others), which is approximated by dividing a perfect fifth into eleven equal divisions. The result is a harmonic world that feels somehow both alien and familiar.
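The arithmetic behind this description is easy to sketch. The Python example below assumes a just perfect fifth (a 3:2 frequency ratio) and an arbitrary 220 Hz starting pitch. Carlos's exact tuning values may differ slightly, and the function names are hypothetical.

```python
import math

# Assumption: the fifth is a just 3:2 ratio, about 701.96 cents.
FIFTH_CENTS = 1200 * math.log2(3 / 2)


def equal_division_step(interval_cents: float, divisions: int) -> float:
    """Return the step size, in cents, when an interval is split into equal parts."""
    return interval_cents / divisions


def scale_frequencies(base_hz: float, step_cents: float, steps: int) -> list:
    """Return steps + 1 frequencies, starting at base_hz and rising by step_cents each time."""
    return [base_hz * 2 ** (i * step_cents / 1200) for i in range(steps + 1)]


beta_step = equal_division_step(FIFTH_CENTS, 11)  # eleven equal parts of a fifth
tet_step = equal_division_step(1200, 12)          # twelve equal parts of an octave

beta = scale_frequencies(220.0, beta_step, 11)    # 220 Hz is an arbitrary starting pitch
steps_per_octave = 1200 / beta_step               # not a whole number

print(f"Beta step: {beta_step:.1f} cents, 12-tone step: {tet_step:.1f} cents")
print(f"Eleven Beta steps above 220 Hz: {beta[-1]:.2f} Hz")
print(f"Beta steps per octave: {steps_per_octave:.2f}")
```

Each Beta step comes out at roughly 63.8 cents, compared with 100 cents for a twelve-tone equal-tempered semitone. Eleven steps land on the fifth, but because 1200 cents is not a whole multiple of the step, no number of steps lands exactly on an octave, which is what makes the scale non-octave-repeating.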

Another difference between electronic music and other forms of instrumental music lies in the role of the artist. In electronic music, artists often embody the multivariate roles of producer, performer, and composer, a paradigm that diverges significantly from other genres, where composition, performance, and production are more often distinctly separate processes. This integration allows electronic artists to exert unparalleled control over every aspect of their music, from the initial concept to the final production. The solitary nature of this process often means that electronic artists engage deeply with the nuances of sound design and production technology, becoming experts in synthesizing, sampling, and manipulating sounds to achieve their unique sonic landscapes.

This tendency for electronic musicians to act as sole producers or lone performer-composers reflects the field’s emphasis on personal expression and technological mastery. Unlike traditional ensemble-based music, where a shared vernacular mediates the collective interplay of musicians and shapes the final output, electronic music often emerges from a distinct exchange between the artist and their audience. The compositional choices made by a Vaporwave artist, for example, refer only incidentally to a system of “music theory.” Rather, the audience understands these compositional choices as a playful recontextualization of cultural artifacts. This could be compared, for example, to the way that film directors like Quentin Tarantino play with genre, at times referencing tropes from well-known genres while also subverting and commenting on genre.

Alternative Theoretical Approaches

From its earliest influences, electronic music has been guided by an alternative canon. Although impelled by exploratory and experimental impulses, electronic musicians have still optimistically sought out alternative musical theories to ground this practice.

[Above: Futurist composer/artist/theorist Luigi Russolo's intonarumori.]

In 1913, Italian Futurist Luigi Russolo argued in his manifesto "The Art of Noises" that musical sounds were too limited and that industrialisation had accustomed the human ear to a wider variety of sounds. Composers, Russolo felt, should explore the city with "ears more sensitive than eyes" and bring these sounds into the concert hall. Not having access to electronic instruments at the time, Russolo invented boxes containing various acoustic noise makers whose sounds were projected for an audience through a horn on the front of the box. He referred to these noisemakers as “Intonarumori” (Italian for “noise intoners”).

Building upon the radical vision of Russolo, French composer, engineer and acoustician Pierre Schaeffer developed musique concrète in the late 1940s, exploring the possibility of using recorded sounds as the primary material for composition. Schaeffer lacked a formal training in music (he received his diploma in radio broadcasting), and perhaps this was one of the reasons he was able to so successfully reimagine the foundations of musical sound. Schaeffer's emphasis on the "objet sonore" (sound object) shifted focus from musical notation and traditional harmonic structures to the intrinsic qualities of sounds themselves, suggesting a new way of listening to and organizing sound based on its spectra, envelope, and timbral characteristics.

By the 1980s, both synthesizers and other forms of acousmatic music (music projected with loudspeakers), including tape music and computer music, were well established within the avant-garde. Music theorists sought new ways of analyzing electronic music that did not require a reliance on an identifiable sound source or performer. For example, spectromorphology, a term coined in 1986 by composer Denis Smalley, provides a framework for understanding how sound spectra change over time.

Smalley also recognized that our perception of sound is difficult to untangle from our lived experience of sound sources in the physical world. Through a concept of surrogacy, Smalley describes how even indeterminate electronic gestures can still evoke imagined archetypes of physical movement. For example, a short, loud sound with a percussive transient and rapid decay perhaps emulates our experience of percussion or overblown wind instruments, tapping into our experience of how these sounds occur in the acoustic world, even if the acousmatic source is unknown or unknowable.

[Above: video demonstration of a Thoresen-style spectromorphological analysis of Åke Parmerud's "Les objets obscurs."]

More recently, Norwegian composer Lasse Thoresen has championed an approach to music perception and analysis termed “aural sonology.” A central concern of this project has been the “analysis of music as heard,” in contrast with most earlier approaches to theory and analysis, which prioritize notated scores or transcriptions. Thoresen’s work builds on Schaeffer’s categorization of sounds, updating and clarifying some terminology, introducing a graphical notation for sound as heard, and introducing new analytical tools to explain emergent musical forms.

The attempt to establish a novel "music theory" of electronic sound presents an intriguing approach to unifying a wide array of acoustic experiences from the perspective of subjective perception rather than traditional notation. But the practical value of these theories as concrete creative guidelines for artists during the composition process is still in question.

What to Learn?

As an undergraduate music composition student, I had to take one course to fulfill a quantitative general education requirement. Not being especially keen on a calculus course, I signed up for a course called Physics of Music, an interdisciplinary course taught by an enthusiastic physics professor and mainly attended by lost-looking conservatory students like myself.

Although my music education involved five trimesters of music theory, as well as music history and ear training courses, I would be hard pressed to tell you the last time I made use of my knowledge of the Neapolitan chord. But, in working with synthesizers, I use my basic knowledge of the physics of music quite frequently.

A basic understanding of how vibrations pass through substances and are transduced from the air to voltage and back again is useful for recording and projecting sound. Understanding how air vibrates in closed and open tubes, and how strings and percussion instruments vibrate, is invaluable for understanding digital synthesis methods, especially physical modeling synthesis, which seeks to simulate an acoustic sound source through a mathematical model. Understanding how we perceive sound illuminates the role of sound spectra in defining timbre and the fundamentally psychoacoustic nature of pitch perception.

A very basic understanding of these principles, which can be learned from reading a few chapters of a textbook on the physics of music, will go a long way towards building confidence in the basic techniques of electronic music composition.

Another useful skill set, which I unfortunately did not start to develop until later in life, is the capacity to hear sound and sound spectra analytically. Jason Corey, a faculty member who has taught courses in acoustics and ear training at the University of Michigan and written a book on the subject, developed a free web app called WebTET (Web-based Technical Ear Trainer) in collaboration with researcher and programmer Dave Benson. This app allows the learner to drill a series of technical listening challenges including hearing and correctly recognising the frequency and width of bands of parametric EQ, identifying the pre-delay and decay time of reverb, and discerning the attack, release, and ratio of dynamic range compression, among other skills.

The capacity for this type of technical listening can help electronic musicians to transcend dials and sliders and to rely more on their ears and less on their eyes. This shift not only cultivates an acute technical ear, but also promotes a more analytical listening approach in general, enabling electronic artists to perceive sound in its abstract form in addition to and apart from its source and purpose.

Conclusion

[Above: Another diagram from Allen Strange's text Electronic Music: Systems, Techniques, and Controls.]

While understanding music theory isn't necessary to explore electronic music, dismissing the value of centuries of musical wisdom would be equally shortsighted. It’s important to note, however, that inspiration can be found in the most unexpected places, and a typical course of study in music theory, focusing mostly on contemporary harmony, may not always provide the most useful or inspirational material for every artist.

For example, one of the most fascinating concepts with relevance to contemporary electronic music can be found in late medieval and early Renaissance music, specifically in the concept of Talea and Color. These terms refer to the rhythmic and melodic patterns used in the composition of isorhythmic motets (a style of vocal music from the 14th and 15th centuries). This technique involves a repeating rhythmic pattern (Talea) which is overlaid with a repeating melodic pattern (Color), but not necessarily at the same rate or length, creating a complex, evolving texture. This concept, though centuries old, resonates with the looping and sampling techniques, as well as the dichotomy between pitch and rhythmic sequencing, explored in some contemporary electronic music, illustrating how ancient practices can still inform and inspire modern creation.
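The interaction of talea and color can be sketched in a few lines of Python. The patterns below are invented for illustration and are not taken from any historical motet. The function name `isorhythm` is likewise hypothetical.

```python
from itertools import cycle, islice
from math import lcm  # available in Python 3.9 and later

# Hypothetical example patterns, chosen only to have different lengths.
talea = [2, 1, 1, 4]               # repeating rhythm: durations in beats
color = ["D", "F", "E", "G", "A"]  # repeating melody: pitch names


def isorhythm(talea, color, length):
    """Pair successive pitches with successive durations, cycling each list independently."""
    return list(islice(zip(cycle(color), cycle(talea)), length))


# The combined pattern realigns only after lcm(len(talea), len(color)) notes.
period = lcm(len(talea), len(color))
notes = isorhythm(talea, color, period)

for pitch, duration in notes:
    print(f"{pitch} for {duration} beat(s)")
```

With a four-value talea and a five-note color, the combined pattern takes 20 notes to realign, much as two sequencer lanes of different lengths drift against each other before returning to their shared starting point.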

Though not directly transferable, the study of historical musical practices, and the theory behind them, can be an excellent source of inspiration, inviting a fresh look at the musical practices of the past.